• Lex is a tool used in the lexical analysis phase to recognize tokens described by regular expressions.

• The Lex tool itself is a compiler: it translates a Lex specification into a C program.

Use of Lex

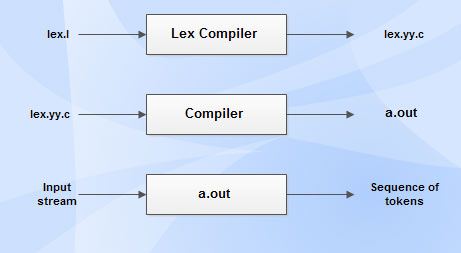

• lex.l is an input file written in the Lex language, which describes the lexical analyzer to be generated. The Lex compiler transforms lex.l into a C program known as lex.yy.c.

• lex.yy.c is compiled by the C compiler to a file called a.out.

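• The output of the C compiler is the working lexical analyzer, which takes a stream of input characters and produces a stream of tokens.

• The lexical analyzer returns the token name (a code) to the parser as the return value of yylex(). yylval is a global variable, shared by the lexical analyzer and the parser, that carries the token's attribute value. Together they return both the name and an attribute value of a token.

• The attribute value can be a numeric code, a pointer to the symbol table, or nothing.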

• Another tool for lexical analyzer generation is Flex.
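The way a token name and its attribute travel to the parser can be sketched in C. This is a hand-written stand-in, not Lex output: in a real setup yylex() is generated by Lex and yylval is usually declared by the parser generator. The token codes, set_input, and input_cursor are hypothetical names chosen for this sketch.

```c
#include <ctype.h>

/* Token codes (hypothetical names chosen for this sketch). */
enum { TOK_EOF = 0, TOK_NUMBER = 256, TOK_OTHER = 257 };

/* Attribute value of the most recent token, shared with the parser. */
int yylval;

/* Input cursor; a generated analyzer would read from a buffered stream. */
static const char *input_cursor = "";

void set_input(const char *text) { input_cursor = text; }

/* Returns the token name (a code); the attribute travels separately in yylval. */
int yylex(void) {
    while (*input_cursor == ' ' || *input_cursor == '\t' || *input_cursor == '\n')
        input_cursor++;                          /* skip whitespace */
    if (*input_cursor == '\0')
        return TOK_EOF;
    if (isdigit((unsigned char)*input_cursor)) {
        yylval = 0;                              /* attribute: the numeric value */
        while (isdigit((unsigned char)*input_cursor))
            yylval = yylval * 10 + (*input_cursor++ - '0');
        return TOK_NUMBER;
    }
    yylval = (unsigned char)*input_cursor++;     /* attribute: the character itself */
    return TOK_OTHER;
}
```

A parser would call yylex() repeatedly and read yylval after each call, for example after set_input("12 +7").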

Structure of Lex Programs

A Lex program has the following form:

declarations

%%

translation rules

%%

auxiliary functions

Declarations: This section includes declarations of variables, constants, and regular definitions.

Translation rules: This section contains regular expressions and code segments. Each rule has the form

Pattern {Action}

Pattern is a regular expression, which may use the regular definitions from the declarations section.

Action is a fragment of code, typically written in C.

Auxiliary functions: This section holds additional functions that are used in the actions. These functions can also be compiled separately and loaded with the lexical analyzer.

The lexical analyzer produced by Lex reads its input one character at a time until it finds the longest prefix of the input that matches one of the patterns.

Once a match is found, the associated action is executed to produce a token.

The token is then passed to the parser for further processing.

Conflict Resolution in Lex

A conflict arises when several prefixes of the input match one or more patterns. Lex resolves it with two rules:

• Always prefer a longer prefix to a shorter prefix.

• If the longest prefix matches two or more patterns, prefer the pattern listed first in the Lex program.
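The two rules can be sketched in C with hand-written matchers standing in for patterns. This is only an illustration of the selection logic, not how Lex is implemented internally; the three rules (the keyword if, an identifier, a number) and all function names are assumptions made for this sketch.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Each matcher returns the length of the longest prefix of s it accepts (0 = no match). */
static size_t match_if(const char *s) { return strncmp(s, "if", 2) == 0 ? 2 : 0; }

static size_t match_identifier(const char *s) {
    size_t n = 0;
    while (isalpha((unsigned char)s[n])) n++;
    return n;
}

static size_t match_number(const char *s) {
    size_t n = 0;
    while (isdigit((unsigned char)s[n])) n++;
    return n;
}

typedef size_t (*matcher_fn)(const char *);

/* Rules in listing order: an earlier entry wins a tie. */
static const matcher_fn rules[] = { match_if, match_identifier, match_number };
enum { RULE_IF = 0, RULE_IDENTIFIER = 1, RULE_NUMBER = 2, RULE_NONE = -1 };

/* Picks the rule for the next lexeme and stores the lexeme length in *length. */
int pick_rule(const char *s, size_t *length) {
    int best = RULE_NONE;
    *length = 0;
    for (int i = 0; i < (int)(sizeof rules / sizeof rules[0]); i++) {
        size_t n = rules[i](s);
        if (n > *length) {   /* strictly longer only, so ties keep the earlier rule */
            *length = n;
            best = i;
        }
    }
    return best;
}
```

With these rules, the input if( selects the keyword rule because both the keyword and the identifier match two characters and the keyword is listed first, while iffy selects the identifier rule because its match is longer.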

Lookahead Operator

• The lookahead operator lets a pattern specify additional input that must follow the lexeme without becoming part of it.

• Lex automatically reads one character ahead of the last character of the lexeme and then retracts, so that only the lexeme itself is consumed.

• Sometimes a pattern should match only when the lexeme is followed by certain other characters. In such cases, a slash (/) in the pattern marks the end of the part that matches the lexeme. What follows the slash must also match, but it is not part of the lexeme.

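(e.g.) In some languages, keywords are not reserved. So the statements

IF(I, J) = 5 and IF(condition) THEN

raise a conflict over whether IF is an array name or a keyword. To resolve this, the Lex rule for the keyword IF can be written as

IF/\(.*\){letter}

Here {letter} is a regular definition for a letter. The part after the slash requires a parenthesized condition followed by a letter, which holds for the keyword use (ignoring blanks) but not for the array assignment.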
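The effect of this rule can be sketched by hand in C. The function below is an illustration only, not what Lex generates; match_if_keyword is a hypothetical name, and the sketch assumes blanks have already been removed from the input.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Returns 2 (the length of the lexeme IF) when s starts with IF and the
   trailing context "( ... ) letter" follows on the same line; otherwise 0. */
size_t match_if_keyword(const char *s) {
    if (strncmp(s, "IF", 2) != 0 || s[2] != '(')
        return 0;
    /* ".*" may absorb any characters except a newline, so try every ')' on
       this line as the closing parenthesis of the trailing context. */
    for (size_t i = 3; s[i] != '\0' && s[i] != '\n'; i++) {
        if (s[i] == ')' && isalpha((unsigned char)s[i + 1]))
            return 2;   /* only IF is consumed; the context stays in the input */
    }
    return 0;
}
```

For IF(I,J)=5 the closing parenthesis is followed by =, so the function reports no keyword and IF can be treated as an array name.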

Design of Lexical Analyzer

• A lexical analyzer can be implemented by simulating either an NFA or a DFA.

• A DFA is preferable in the implementation of Lex.

Structure of Generated Analyzer

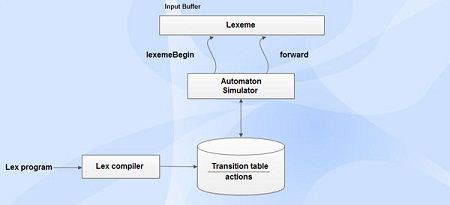

The figure shows the architecture of a lexical analyzer generated by Lex.

The lexical analyzer program includes:

• A program to simulate the automaton.

• Components created from the Lex program by Lex itself, which are as follows:

o A transition table for the automaton.

o Functions that are passed directly through Lex to the output.

o Actions from the input program (fragments of code), which are invoked by the automaton simulator when needed.
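The three kinds of components can be pictured with a small table-driven sketch in C. It is not actual Lex output: the two token kinds (identifier and number), the character classes, and every name below are assumptions made for illustration. In this tiny automaton every non-start state is accepting, so no retraction is needed.

```c
#include <ctype.h>
#include <stddef.h>

enum { CLASS_LETTER, CLASS_DIGIT, CLASS_OTHER, CLASS_COUNT };
enum { STATE_START, STATE_IDENT, STATE_NUMBER, STATE_COUNT, STATE_DEAD = -1 };
enum { TOKEN_NONE, TOKEN_IDENT, TOKEN_NUMBER };

/* Transition table: rows are states, columns are character classes. */
static const int transition[STATE_COUNT][CLASS_COUNT] = {
    /* START  */ { STATE_IDENT, STATE_NUMBER, STATE_DEAD },
    /* IDENT  */ { STATE_IDENT, STATE_IDENT,  STATE_DEAD },
    /* NUMBER */ { STATE_DEAD,  STATE_NUMBER, STATE_DEAD },
};

/* Actions: one code fragment per accepting state. */
static int action_ident(void)  { return TOKEN_IDENT; }
static int action_number(void) { return TOKEN_NUMBER; }
static int (*const action[STATE_COUNT])(void) = { NULL, action_ident, action_number };

/* Helper function of the kind that is passed through to the output unchanged. */
static int classify(char c) {
    if (isalpha((unsigned char)c)) return CLASS_LETTER;
    if (isdigit((unsigned char)c)) return CLASS_DIGIT;
    return CLASS_OTHER;
}

/* Automaton simulator: follows the table, then invokes the action. */
int next_token(const char *s, size_t *length) {
    int state = STATE_START;
    size_t i = 0;
    while (s[i] != '\0') {
        int next = transition[state][classify(s[i])];
        if (next == STATE_DEAD)
            break;               /* no next state: stop at the current lexeme */
        state = next;
        i++;
    }
    *length = i;
    return action[state] ? action[state]() : TOKEN_NONE;
}
```

The simulator itself never changes; only the table and the actions depend on the patterns, which is why Lex can generate them from the input program.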

Steps to construct automaton

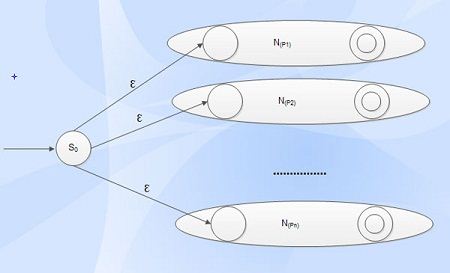

Step 1: Convert each regular expression into an NFA, for example by Thompson's construction. (The direct method instead builds a DFA straight from the regular expression.)

Step 2: Combine all the NFAs into one by introducing a new start state with ε-transitions to the start state of each NFA Ni for pattern Pi.

Step 2 is needed because the objective is a single automaton that recognizes lexemes matching any of the patterns.

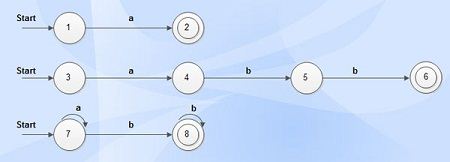

(e.g.) a { action A1 for pattern P1 }

abb { action A2 for pattern P2 }

a*b+ { action A3 for pattern P3 }

For the string abb, both pattern P2 and pattern P3 match. Pattern P2 is chosen because it is listed first in the Lex program.

For a string such as aabbb..., many prefixes match pattern P3. Lex takes the longest one, so it keeps reading until the b's run out.

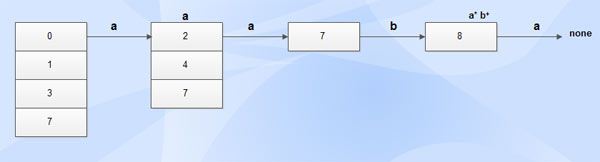

The figures show the NFAs for recognizing each of the three patterns above, and the combined NFA for all three patterns.

Pattern Matching Based on NFAs

The lexical analyzer reads input from the input buffer, starting at the beginning of the lexeme indicated by the pointer lexemeBegin. As the forward pointer moves ahead over the input symbols, the analyzer calculates the set of NFA states it is in at each point. Eventually the NFA simulation reaches a point where there is no next state for the input symbol. No longer prefix of the input can then reach an accepting state, so the decision is made on the longest prefix seen so far that is a lexeme matching some pattern. To find it, the analyzer looks backwards through the sequence of state sets until it finds a set that includes one or more accepting states. If that set has several accepting states, the pattern Pi that appears earliest in the Lex program is chosen.

(e.g.) Input w = aaba

Explanation

The process starts with the ε-closure of the initial state 0. After the fourth input symbol, the set of states is empty, because there is no transition out of state 8 on input a. The analyzer therefore retracts, looking for the most recent set of states that includes an accepting state. In the figure, state 2, which is an accepting state, is reached after reading the first input symbol a, so pattern a has been matched at that point. State 8 is reached after reading aab, which matches pattern a*b+. By the Lex rule, the longest matching prefix is taken. Hence action A3, corresponding to pattern P3, is executed for the lexeme aab.
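The walk-through can be reproduced with a small NFA simulation in C. This is a sketch under assumptions: states 0, 2, and 8 are numbered as in the explanation, while the numbering of the remaining states (1, 3 to 7, with 6 accepting for abb) is assumed here because the figure is not reproduced in the text. Instead of storing every state set and walking back, the sketch remembers the most recent accepting point, which gives the same result.

```c
#include <stddef.h>

enum { NFA_STATES = 9 };
typedef unsigned int state_set;          /* bit i set <=> NFA state i is active */
#define BIT(s) (1u << (s))

/* Moves out of a single state on one input symbol (assumed numbering). */
static state_set move_one(int state, char c) {
    switch (state) {
    case 1: return c == 'a' ? BIT(2) : 0;                        /* pattern a    */
    case 3: return c == 'a' ? BIT(4) : 0;                        /* pattern abb  */
    case 4: return c == 'b' ? BIT(5) : 0;
    case 5: return c == 'b' ? BIT(6) : 0;
    case 7: return c == 'a' ? BIT(7) : c == 'b' ? BIT(8) : 0;    /* pattern a*b+ */
    case 8: return c == 'b' ? BIT(8) : 0;
    default: return 0;
    }
}

/* Only state 0 has epsilon-moves, so no closure is needed after a move. */
static state_set move_set(state_set set, char c) {
    state_set next = 0;
    for (int s = 0; s < NFA_STATES; s++)
        if (set & BIT(s))
            next |= move_one(s, c);
    return next;
}

/* Earliest-listed pattern whose accepting state is in the set, or 0 if none. */
static int accepted_pattern(state_set set) {
    if (set & BIT(2)) return 1;
    if (set & BIT(6)) return 2;
    if (set & BIT(8)) return 3;
    return 0;
}

/* Returns the pattern number for the longest matching prefix (0 = no match)
   and stores the lexeme length in *length. */
int nfa_match(const char *input, size_t *length) {
    state_set current = BIT(0) | BIT(1) | BIT(3) | BIT(7);   /* epsilon-closure of state 0 */
    int best_pattern = 0;
    *length = 0;
    for (size_t i = 0; input[i] != '\0'; i++) {
        current = move_set(current, input[i]);
        if (current == 0)
            break;                        /* no next state: stop scanning */
        int p = accepted_pattern(current);
        if (p != 0) {                     /* remember the latest accepting point */
            best_pattern = p;
            *length = i + 1;
        }
    }
    return best_pattern;
}
```

For the input aaba the function reports pattern 3 with a lexeme of length 3 (aab), and for abb it reports pattern 2, matching the tie-breaking rule.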

DFAs for Lexical Analyzers

DFAs can also be used to implement the analyzer produced by Lex. The DFA is constructed from the combined NFA for all the patterns using the subset construction algorithm, so each DFA state stands for a set of NFA states. If that set contains one or more accepting NFA states, the first pattern in the Lex program whose accepting state is in the set is determined, and that pattern is the output of the DFA state. The DFA is used in the same way as the NFA. Simulation continues until no next state is found. Then the analyzer retracts through the sequence of states it entered until it finds an accepting DFA state, and the action associated with the pattern for that state is executed.
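As a sketch, the DFA for the three example patterns a, abb, and a*b+ can be written as a table in C and simulated with explicit retraction. The table below was derived by hand with the subset construction from an assumed NFA numbering (shown in the comments), so treat the state names as illustrative. MAX_LEXEME and dfa_match are hypothetical names.

```c
#include <stddef.h>

enum { MAX_LEXEME = 64 };

/* DFA states and the NFA state sets they stand for (assumed numbering):
   0 = {0,1,3,7}, 1 = {2,4,7}, 2 = {7}, 3 = {8}, 4 = {5,8}, 5 = {6,8}.
   Column 0 is input a, column 1 is input b; -1 means no next state. */
static const int dfa_next[6][2] = {
    {  1, 3 },
    {  2, 4 },
    {  2, 3 },
    { -1, 3 },
    { -1, 5 },
    { -1, 3 },
};

/* Output of each DFA state: the first listed pattern it accepts, or 0.
   State 5 contains accepting states for both abb and a*b+, so abb wins. */
static const int dfa_pattern[6] = { 0, 1, 0, 3, 3, 2 };

/* Returns the pattern number for the longest matching prefix (0 = no match)
   and stores the lexeme length in *length. */
int dfa_match(const char *input, size_t *length) {
    int history[MAX_LEXEME + 1];          /* state entered after each symbol */
    size_t n = 0;
    history[0] = 0;
    while (n < MAX_LEXEME && (input[n] == 'a' || input[n] == 'b')) {
        int next = dfa_next[history[n]][input[n] == 'b'];
        if (next < 0)
            break;                        /* no next state */
        history[++n] = next;
    }
    /* Retract until an accepting state is found (or nothing is left). */
    while (n > 0 && dfa_pattern[history[n]] == 0)
        n--;
    *length = n;
    return dfa_pattern[history[n]];
}
```

On the input aa the simulation ends in a non-accepting state, so it retracts one symbol and reports pattern 1 with the lexeme a.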

Implementing Lookahead Operator

The lookahead operator / in r1/r2 is needed because the pattern r1 for a particular token may need to describe some trailing context r2 in order to correctly identify the actual lexeme.

When the pattern r1/r2 is converted to an NFA, the '/' is treated as ε.

If the NFA recognizes a prefix xy of the input as a match for this regular expression, the lexeme does not end where the NFA enters its accepting state.

Instead, the end of the lexeme occurs when the NFA enters a state p such that

• p has an ε-transition on the (imaginary) /,

• there is a path from the start state to state p that spells out x,

• there is a path from state p to the accepting state that spells out y,

• x is as long as possible for any xy satisfying the first three conditions.

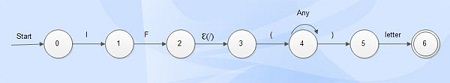

The figure shows the NFA for recognizing the keyword IF with lookahead. The transition from state 2 to state 3 represents the lookahead operator (an ε-transition).

The accepting state is state 6, which indicates the presence of the keyword IF. Whenever state 6 is reached, the lexeme IF is found by looking backwards to the last occurrence of state 2.
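The idea of looking back to state 2 can be sketched as an NFA simulation in C that records the input position each time state 2 is entered. States 2, 3, and 6 follow the description above; the labels on the other transitions are assumed from the rule IF/\(.*\){letter}, since the figure is not reproduced in the text. The function name is hypothetical, and the sketch assumes blanks have been removed from the input.

```c
#include <ctype.h>
#include <stddef.h>

#define BIT(s) (1u << (s))

/* Assumed transitions: 0 -I-> 1 -F-> 2 -(epsilon for '/')-> 3 -'('-> 4,
   4 -any character except newline-> 4, 4 -')'-> 5, 5 -letter-> 6.
   Returns the lexeme length (2 for IF) if state 6 is reached, otherwise 0. */
size_t if_lexeme_end(const char *s) {
    unsigned int current = BIT(0);
    size_t lexeme_end = 0;
    for (size_t i = 0; s[i] != '\0' && current != 0; i++) {
        unsigned int next = 0;
        char c = s[i];
        if ((current & BIT(0)) && c == 'I') next |= BIT(1);
        if ((current & BIT(1)) && c == 'F') next |= BIT(2) | BIT(3); /* follow the epsilon move */
        if ((current & BIT(3)) && c == '(') next |= BIT(4);
        if ((current & BIT(4)) && c != '\n') next |= BIT(4);
        if ((current & BIT(4)) && c == ')') next |= BIT(5);
        if ((current & BIT(5)) && isalpha((unsigned char)c)) next |= BIT(6);
        if (next & BIT(2))
            lexeme_end = i + 1;          /* the lexeme ends where state 2 is entered */
        if (next & BIT(6))
            return lexeme_end;           /* accepting: report the recorded end */
        current = next;
    }
    return 0;
}
```

The accepting state only confirms that the trailing context is present; the reported lexeme length comes from the position recorded at state 2.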

Dinesh Thakur holds an B.C.A, MCDBA, MCSD certifications. Dinesh authors the hugely popular
