Lexical analysis is the process of converting a sequence of characters from a source program into a sequence of tokens.

A program that performs lexical analysis is called a lexical analyzer (lexer), tokenizer, or scanner.

Lexical analysis consists of two stages of processing:

• Scanning

• Tokenization

Token, Pattern and Lexeme

Token

A token is a valid sequence of characters, given by a lexeme, that is treated as a single unit. In a programming language,

• keywords,

• constants,

• identifiers,

• numbers,

• operators and

• punctuation symbols

are possible tokens to be identified.

Pattern

A pattern describes a rule that a sequence of characters (a lexeme) must match to form a token. It can be defined by regular expressions or grammar rules.

Lexeme

A lexeme is a sequence of characters that matches the pattern for a token, i.e., an instance of a token.

(e.g.) c=a+b*5;

Lexemes and tokens

| Lexemes | Tokens |
|---|---|
| c | identifier |
| = | assignment symbol |
| a | identifier |
| + | + (addition symbol) |
| b | identifier |
| * | * (multiplication symbol) |
| 5 | 5 (number) |

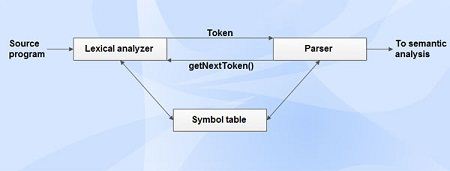

The sequence of tokens produced by the lexical analyzer helps the parser analyze the syntax of the program.
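The lexeme-to-token mapping above can be sketched with regular-expression patterns. This is a minimal illustration, not a production lexer: the token names (`IDENTIFIER`, `ASSIGN`, and so on), the patterns, and the function `tokenize` are all assumptions made for this example.

```python
import re

# Hypothetical token names and patterns, chosen only for this illustration.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("IDENTIFIER", r"[A-Za-z_]\w*"),
    ("ASSIGN", r"="),
    ("PLUS", r"\+"),
    ("TIMES", r"\*"),
    ("SEMICOLON", r";"),
    ("SKIP", r"\s+"),  # white space is matched but never emitted
]

# One master pattern with a named group per token.
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source):
    """Return a list of (lexeme, token) pairs for the source string."""
    pairs = []
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            raise ValueError(f"illegal character {source[pos]!r} at position {pos}")
        if match.lastgroup != "SKIP":
            # match.group() is the lexeme; match.lastgroup names the token.
            pairs.append((match.group(), match.lastgroup))
        pos = match.end()
    return pairs
```

Calling `tokenize("c=a+b*5;")` yields one pair per lexeme in the table, plus a final pair for the semicolon, which the table does not list.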

Role of Lexical Analyzer

Lexical analyzer performs the following tasks:

• Reads the source program, scans the input characters, groups them into lexemes, and produces tokens as output.

• Enters the identified token into the symbol table.

• Strips out white space and comments from the source program.

• Correlates error messages with the source program, i.e., reports each error together with the line number where it occurs.

• Expands macros if they are found in the source program.

The tasks of the lexical analyzer can be divided into two processes:

Scanning: Reads the input characters and removes white space and comments.

Lexical Analysis: Produces tokens as output.
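The scanning process can be sketched as follows. This is a simplified illustration that assumes C-style comments (`//` and `/* */`). The function name `scan` and its output format are hypothetical. It removes white space and comments and keeps the line number of each remaining chunk so that later error messages can refer to it.

```python
def scan(source):
    """Scanning phase: drop white space and comments, keep line numbers.

    Returns a list of (line_number, chunk) pairs, where each chunk is a
    maximal run of characters that is neither white space nor comment.
    """
    chunks = []
    line = 1
    i = 0
    n = len(source)
    while i < n:
        ch = source[i]
        if ch == "\n":
            line += 1
            i += 1
        elif ch.isspace():
            i += 1
        elif source.startswith("//", i):
            # Line comment: skip to the end of the line.
            while i < n and source[i] != "\n":
                i += 1
        elif source.startswith("/*", i):
            end = source.find("*/", i + 2)
            if end == -1:
                # Report the error together with its line number.
                raise ValueError(f"line {line}: unterminated comment")
            line += source.count("\n", i, end)
            i = end + 2
        else:
            start = i
            while (i < n and not source[i].isspace()
                   and not source.startswith(("//", "/*"), i)):
                i += 1
            chunks.append((line, source[start:i]))
    return chunks
```

A second pass (the lexical analysis process proper) would then split each chunk into tokens. Real scanners usually interleave the two steps rather than building an intermediate list.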

Need of Lexical Analyzer

Simplicity of compiler design: The removal of white space and comments lets the syntax analyzer process syntactic constructs efficiently.

Compiler efficiency is improved: Specialized buffering techniques for reading characters speed up compilation.

Compiler portability is enhanced

Issues in Lexical Analysis

Lexical analysis is the process of producing tokens from the source program. It has the following issues:

• Lookahead

• Ambiguities

Lookahead

Lookahead is required to decide when one token ends and the next begins. Simple examples with lookahead issues are i vs. if and = vs. ==. Therefore, a way to describe the lexemes of each token is required.

A way is also needed to resolve ambiguities such as:

• Is if two variables, i and f, or the keyword if?

• Is == two equal signs, = and =, or the single operator ==?

• arr(5, 4) vs. fn(5, 4) in Ada (array reference syntax and function call syntax are similar).

Hence, the number of lookahead characters to consider must be fixed, and a way to describe the lexemes of each token is needed.

Regular expressions are one of the most popular ways of representing tokens.
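The two lookahead cases mentioned above (i vs. if and = vs. ==) can be sketched with a hand-written routine that peeks at the next character before deciding where the token ends. The function `next_token`, the token names, and the one-entry keyword set are assumptions made for this example.

```python
KEYWORDS = {"if"}  # hypothetical keyword set for the example


def next_token(text, pos):
    """Return (token, lexeme, new_pos) for the token starting at text[pos]."""
    ch = text[pos]
    if ch == "=":
        # One character of lookahead decides between = and ==.
        if pos + 1 < len(text) and text[pos + 1] == "=":
            return ("EQ", "==", pos + 2)
        return ("ASSIGN", "=", pos + 1)
    if ch.isalpha() or ch == "_":
        end = pos
        # Keep consuming while the next character can extend the name,
        # so "if" is not split into "i" followed by "f".
        while end < len(text) and (text[end].isalnum() or text[end] == "_"):
            end += 1
        lexeme = text[pos:end]
        token = "KEYWORD" if lexeme in KEYWORDS else "ID"
        return (token, lexeme, end)
    raise ValueError(f"unexpected character {ch!r} at position {pos}")
```

The keyword check happens only after the whole name has been read, which is why `iffy` comes out as an identifier rather than the keyword `if` followed by `fy`.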

Ambiguities

Lexical analysis programs written with lex accept ambiguous specifications. When more than one expression can match the current input, lex chooses as follows:

• The longest match is preferred.

• Among rules which matched the same number of characters, the rule given first is preferred.
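The two rules can be imitated in a few lines. This sketch is not lex itself: the rule list `RULES` and the function `match_at` are hypothetical, and it simply tries every pattern at the current position, keeping the longest match and breaking ties in favor of the earlier rule.

```python
import re

# Hypothetical rule list; order matters for tie-breaking.
RULES = [
    ("IF", re.compile(r"if")),
    ("ID", re.compile(r"[a-z]+")),
    ("EQ", re.compile(r"==")),
    ("ASSIGN", re.compile(r"=")),
]


def match_at(text, pos):
    """Return (rule_name, lexeme) chosen at text[pos], or None if no rule matches."""
    best = None
    for name, pattern in RULES:
        m = pattern.match(text, pos)
        # Strictly longer matches replace the current best; on a tie the
        # earlier rule stays, so the rule given first is preferred.
        if m and (best is None or len(m.group()) > len(best[1])):
            best = (name, m.group())
    return best
```

With these rules, the input `if` matches both `IF` and `ID` with two characters, so the first rule wins, while `iffy` is longer under `ID` and is therefore an identifier.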

Lexical Errors

• A character sequence that cannot be scanned into any valid token is a lexical error.

• Lexical errors are uncommon, but they must still be handled by a scanner.

• Misspellings of identifiers, keywords, or operators are considered lexical errors.

Usually, a lexical error is caused by the appearance of some illegal character, mostly at the beginning of a token.

Error Recovery Schemes

• Panic mode recovery

• Local correction

o Source text is changed around the error point in order to get a correct text.

o Analyzer will be restarted with the resultant new text as input.

• Global correction

o It is an enhanced panic mode recovery.

o Preferred when local correction fails.

Panic mode recovery

In panic mode recovery, successive characters that match no pattern are deleted from the remaining input until the lexical analyzer can find a well-formed token at the beginning of what input is left.

(e.g.) Suppose the string fi is encountered for the first time in a C program in the context:

fi (a== f(x))

A lexical analyzer cannot tell whether fi is a misspelling of the keyword if or an undeclared function identifier.

Since fi is a valid lexeme for the token id, the lexical analyzer will return the token id to the parser.
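Panic mode recovery can be sketched as a loop that deletes characters until some token pattern matches again. The token set, the function name `tokenize_with_panic_mode`, and the error-report format are assumptions for this illustration, and deletion is made to stop at white space purely to keep the reports short.

```python
import re

# Hypothetical token patterns; "==" is listed before "=" inside OP.
TOKEN = re.compile(r"(?P<ID>[A-Za-z_]\w*)|(?P<NUMBER>\d+)|(?P<OP>==|[=+*();])")


def tokenize_with_panic_mode(source):
    """Return (tokens, errors); errors lists (position, deleted_text) pairs."""
    tokens, errors = [], []
    pos = 0
    while pos < len(source):
        if source[pos].isspace():
            pos += 1
            continue
        m = TOKEN.match(source, pos)
        if m:
            tokens.append((m.lastgroup, m.group()))
            pos = m.end()
        else:
            # Panic mode: delete characters until a token can start here.
            start = pos
            while (pos < len(source) and not source[pos].isspace()
                   and not TOKEN.match(source, pos)):
                pos += 1
            errors.append((start, source[start:pos]))
    return tokens, errors
```

Running it on `fi (a== f(x))` reports no errors at all, because fi is a valid identifier, which matches the point made above. An input such as `a = @#5` has `@#` deleted and scanning resumes at `5`.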

Local correction

Local correction performs deletion, insertion, and/or replacement of any number of symbols at the error detection point.

(e.g.) In Pascal, given c[i] ‘=’; the scanner deletes the first quote because it cannot legally follow the closing bracket, and the parser replaces the resulting ‘=’ by an assignment statement.

Most errors are corrected by local correction.

(e.g.) The effects of lexical error recovery might well create a later syntax error, handled by the parser. Consider

· · · for$tnight · · ·

The $ terminates scanning of for. Since no valid token begins with $, it is deleted. Then tnight is scanned as an identifier.

In effect, the result is

· · · for tnight · · ·

which will cause a syntax error. Such false errors are unavoidable, though syntactic error repair may help.

Lexical error handling approaches

Lexical errors can be handled by the following actions:

• Deleting one character from the remaining input.

• Inserting a missing character into the remaining input.

• Replacing a character by another character.

• Transposing two adjacent characters.
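The four actions above can be sketched as a search for keywords that are exactly one edit away from a misspelled lexeme. This is only an illustration of the idea: the keyword set, the lowercase-only alphabet, and the function `single_edit_repairs` are assumptions, and a real compiler would need some policy for choosing among several candidates.

```python
import string

KEYWORDS = {"if", "for", "while", "else"}  # hypothetical keyword set


def single_edit_repairs(lexeme, alphabet=string.ascii_lowercase):
    """Map each keyword reachable from lexeme by one edit to the edit used."""
    candidates = {}
    for i in range(len(lexeme) + 1):
        left, right = lexeme[:i], lexeme[i:]
        if right:
            # Delete one character.
            candidates.setdefault(left + right[1:], "delete")
        if len(right) > 1:
            # Transpose two adjacent characters.
            candidates.setdefault(left + right[1] + right[0] + right[2:], "transpose")
        for ch in alphabet:
            # Insert a missing character.
            candidates.setdefault(left + ch + right, "insert")
            if right:
                # Replace a character by another character.
                candidates.setdefault(left + ch + right[1:], "replace")
    return {
        word: op
        for word, op in candidates.items()
        if word in KEYWORDS and word != lexeme
    }
```

For the earlier fi example, `single_edit_repairs("fi")` finds the keyword `if` through a transposition. Whether to apply such a repair is a separate decision, since fi may equally be an intended identifier.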

Dinesh Thakur holds an B.C.A, MCDBA, MCSD certifications. Dinesh authors the hugely popular