Because large drawings cannot fit in their entirety on a display screen, either the whole drawing must be compressed to fit, which obscures details and creates clutter, or only a portion of it can be displayed. The portion of a 2D or 3D object to be displayed is chosen by specifying a rectangular window that limits which part of the drawing is visible.

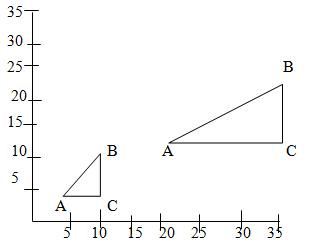

A 2D window is usually defined either by choosing minimum and maximum values for its x- and y-coordinates or by specifying the window's center together with its maximum extent from that center in height and width. Deciding whether a point is in view then takes only a few comparisons against these limits (or subtractions from the center). Lines and polygons, however, can lie partly inside and partly outside the window, so a clipping operation is performed that discards the parts falling outside it. Only the parts that remain inside the window are drawn or otherwise rendered.
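The point test and clipping operation described above can be sketched in Python as follows. This is a minimal sketch, assuming an axis-aligned window expressed in the same coordinates as the drawing; the names `Window`, `from_center`, `clip_line`, and `clip_polygon` are hypothetical. The text does not name a particular clipping algorithm, so the sketch substitutes two standard ones: Cohen-Sutherland for line segments and Sutherland-Hodgman for polygons.

```python
from __future__ import annotations

from dataclasses import dataclass

Point = tuple[float, float]


@dataclass(frozen=True)
class Window:
    """Hypothetical axis-aligned 2D window given by its min/max corners."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    @classmethod
    def from_center(cls, cx: float, cy: float, half_w: float, half_h: float) -> Window:
        # Center form: the window extends half_w and half_h on each side of (cx, cy).
        return cls(cx - half_w, cy - half_h, cx + half_w, cy + half_h)

    def contains(self, p: Point) -> bool:
        # Four comparisons decide whether a point is in view.
        x, y = p
        return self.xmin <= x <= self.xmax and self.ymin <= y <= self.ymax


# Cohen-Sutherland region bits (an assumed choice of line-clipping algorithm).
INSIDE, LEFT, RIGHT, BOTTOM, TOP = 0, 1, 2, 4, 8


def outcode(w: Window, x: float, y: float) -> int:
    # Encode which side(s) of the window a point lies on.
    code = INSIDE
    if x < w.xmin:
        code |= LEFT
    elif x > w.xmax:
        code |= RIGHT
    if y < w.ymin:
        code |= BOTTOM
    elif y > w.ymax:
        code |= TOP
    return code


def clip_line(w: Window, p0: Point, p1: Point) -> tuple[Point, Point] | None:
    """Return the visible part of segment p0-p1, or None if none of it is visible."""
    (x0, y0), (x1, y1) = p0, p1
    c0, c1 = outcode(w, x0, y0), outcode(w, x1, y1)
    while True:
        if not (c0 | c1):
            return (x0, y0), (x1, y1)  # both endpoints inside: accept
        if c0 & c1:
            return None  # both endpoints beyond the same edge: reject
        c = c0 or c1  # pick an endpoint that is outside
        # Move that endpoint to where the line crosses the offending edge.
        if c & TOP:
            x, y = x0 + (x1 - x0) * (w.ymax - y0) / (y1 - y0), w.ymax
        elif c & BOTTOM:
            x, y = x0 + (x1 - x0) * (w.ymin - y0) / (y1 - y0), w.ymin
        elif c & RIGHT:
            x, y = w.xmax, y0 + (y1 - y0) * (w.xmax - x0) / (x1 - x0)
        else:  # LEFT
            x, y = w.xmin, y0 + (y1 - y0) * (w.xmin - x0) / (x1 - x0)
        if c == c0:
            x0, y0 = x, y
            c0 = outcode(w, x0, y0)
        else:
            x1, y1 = x, y
            c1 = outcode(w, x1, y1)


def _cross_x(a: Point, b: Point, x: float) -> Point:
    # Point where segment a-b crosses the vertical line at x.
    t = (x - a[0]) / (b[0] - a[0])
    return (x, a[1] + t * (b[1] - a[1]))


def _cross_y(a: Point, b: Point, y: float) -> Point:
    # Point where segment a-b crosses the horizontal line at y.
    t = (y - a[1]) / (b[1] - a[1])
    return (a[0] + t * (b[0] - a[0]), y)


def clip_polygon(w: Window, poly: list[Point]) -> list[Point]:
    """Sutherland-Hodgman: clip the polygon against each window edge in turn."""
    edges = [
        (lambda p: p[0] >= w.xmin, lambda a, b: _cross_x(a, b, w.xmin)),
        (lambda p: p[0] <= w.xmax, lambda a, b: _cross_x(a, b, w.xmax)),
        (lambda p: p[1] >= w.ymin, lambda a, b: _cross_y(a, b, w.ymin)),
        (lambda p: p[1] <= w.ymax, lambda a, b: _cross_y(a, b, w.ymax)),
    ]
    out = list(poly)
    for inside, cross in edges:
        src, out = out, []
        for i, cur in enumerate(src):
            prev = src[i - 1]  # i == 0 wraps to the last vertex
            if inside(cur):
                if not inside(prev):
                    out.append(cross(prev, cur))  # entering the window
                out.append(cur)
            elif inside(prev):
                out.append(cross(prev, cur))  # leaving the window
        if not out:
            break  # polygon lies entirely outside
    return out
```

For example, with `w = Window(0, 0, 10, 10)`, the horizontal segment from `(-5, 5)` to `(15, 5)` is clipped to `(0, 5)`-`(10, 5)`, while a segment lying wholly to the left of the window is rejected after a single bitwise test. Cohen-Sutherland is used here because its region codes let most fully-visible or fully-invisible lines be decided by comparisons alone, matching the cheap point test; other algorithms (for example Liang-Barsky) would serve equally well.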