Software measurement is carried out through metrics. Three things are measured: the process, through process metrics; the product, through product metrics; and the project, through project metrics.

Process metrics assess the effectiveness and quality of the software process, determine the maturity of the process, the effort required in the process, the effectiveness of defect removal during development, and so on. Product metrics measure the work products produced during the different phases of software development. Project metrics describe the characteristics of the project and its execution.


Process Metrics

To improve any process, it is necessary to measure its specified attributes, develop a set of meaningful metrics based on these attributes, and then use these metrics to obtain indicators in order to derive a strategy for process improvement.

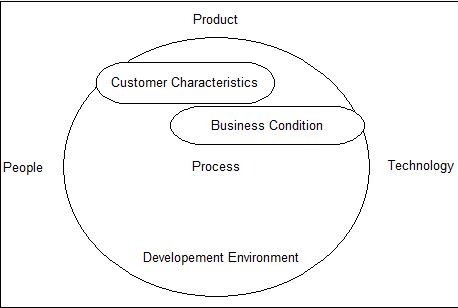

Using software process metrics, software engineers can assess the efficiency of the software process carried out within the process framework. The process sits at the centre of a triangle connecting three factors (product, people, and technology), which have an important influence on software quality and organizational performance. The skill and motivation of the people, the complexity of the product, and the level of technology used in development all affect quality and team performance. The process triangle exists within a circle of environmental conditions, which include the development environment, business conditions, and customer/user characteristics.

To measure the efficiency and effectiveness of the software process, a set of metrics is formulated based on the outcomes derived from the process. These outcomes are listed below.

- Number of errors found before the software release

- Defects detected and reported by the user after delivery of the software

- Time spent in fixing errors

- Work products delivered

- Human effort used

- Time expended

- Conformity to schedule

- Wait time

- Number of contract modifications

- Estimated cost compared to actual cost.
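The first two outcomes in this list are often combined into a single indicator. The sketch below uses defect removal efficiency (DRE), a widely used process metric that the text above does not define explicitly; the function name and the sample figures are illustrative assumptions.

```python
def defect_removal_efficiency(errors_before_release, defects_after_delivery):
    """DRE = E / (E + D).

    E = errors found before the software release
    D = defects reported by the user after delivery
    A value close to 1.0 means few problems escaped to the user.
    """
    total = errors_before_release + defects_after_delivery
    if total == 0:
        raise ValueError("no errors or defects recorded")
    return errors_before_release / total


# Illustrative figures only: 90 errors caught in-house, 10 defects reported later.
print(round(defect_removal_efficiency(90, 10), 2))
```

An organization would normally track this value per release rather than read much into a single figure.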

Note that process metrics can also be derived from the characteristics of a particular software engineering activity. For example, an organization may measure the effort and time spent on user interface design.

Process metrics are of two types: private and public. Private metrics are private to the individual and serve as an indicator only for that individual. Defect rates by software module and errors found by an individual are examples of private process metrics. Note that some process metrics are public to all team members but private to the project. These include errors detected during formal technical reviews and defects reported against the various functions of the software.

Public metrics incorporate information that was originally private to individuals and teams. Project-level defect rates, effort, and related data are collected, analyzed, and assessed to obtain indicators that help improve organizational process performance.

Process Metrics Etiquette

Process metrics can provide substantial benefits as the organization works to improve its process maturity. However, these metrics can be misused and create problems for the organization. In order to avoid this misuse, some guidelines have been defined, which can be used both by managers and software engineers. These guidelines are listed below.

- Common sense and organizational sensitivity should be applied when interpreting metrics data.

- Feedback should be provided on a regular basis to the individuals or teams involved in collecting measures and metrics.

- Metrics should not be used to appraise or threaten individuals.

- Metrics data that indicate a problem area should not be considered negative; such data are merely an indicator of a need for process improvement.

- Reliance on a single metric to the exclusion of other important metrics should be avoided.

As an organization becomes familiar with process metrics, the derivation of simple indicators gives way to a more rigorous approach called Statistical Software Process Improvement (SSPI). SSPI uses software failure analysis to collect information about all errors (detected before delivery of the software) and defects (detected after the software is delivered to the user) encountered during the development of a product or system.

Product Metrics

In the software development process, a work product is produced at the end of each successful phase. Each product can be measured at any stage of its development. Metrics are developed for these products so that they can indicate whether a product is being developed according to the user requirements. If a product does not meet the user requirements, then the necessary actions are taken in the respective phase.

Product metrics help software engineers to detect and correct potential problems before they result in catastrophic defects. In addition, product metrics assess the internal product attributes in order to determine the quality of the following.

- Analysis, design, and code model

- Effectiveness of test cases

- Overall quality of the software under development.

Various metrics formulated for products in the development process are listed below.

- Metrics for analysis model: These address various aspects of the analysis model such as system functionality, system size, and so on.

- Metrics for design model: These allow software engineers to assess the quality of design and include architectural design metrics, component-level design metrics, and so on.

- Metrics for source code: These assess source code complexity, maintainability, and other characteristics.

- Metrics for testing: These help to design efficient and effective test cases and also evaluate the effectiveness of testing.

- Metrics for maintenance: These assess the stability of the software product.

Metrics for the Analysis Model

There are only a few metrics that have been proposed for the analysis model. However, it is possible to use metrics for project estimation in the context of the analysis model. These metrics are used to examine the analysis model with the objective of predicting the size of the resultant system. Size acts as an indicator of increased coding, integration, and testing effort; sometimes it also acts as an indicator of complexity involved in the software design. Function point and lines of code are the commonly used methods for size estimation.

Function Point (FP) Metric

The function point metric, which was proposed by A. J. Albrecht, is used to measure the functionality delivered by the system, estimate the effort, predict the number of errors, and estimate the number of components in the system. Function point is derived by using a relationship between the complexity of the software and the information domain values. Information domain values used in function point include the number of external inputs, external outputs, external inquiries, internal logical files, and external interface files.
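The relationship described above can be sketched as follows. This is a minimal illustration, not a full counting procedure: it assumes the commonly quoted average-complexity weights (4, 5, 4, 10, 7), the usual adjustment formula FP = count total × [0.65 + 0.01 × ΣFi] with fourteen adjustment ratings from 0 to 5, and made-up counts. None of these specifics are given in the text above.

```python
# Assumed average-complexity weights for the five information domain values.
AVERAGE_WEIGHTS = {
    "external_inputs": 4,
    "external_outputs": 5,
    "external_inquiries": 4,
    "internal_logical_files": 10,
    "external_interface_files": 7,
}


def function_points(counts, adjustment_ratings):
    """Return the adjusted function point value.

    counts: dict mapping each information domain value to its count.
    adjustment_ratings: 14 ratings (0..5) for the value adjustment factors.
    """
    if len(adjustment_ratings) != 14:
        raise ValueError("expected 14 adjustment ratings")
    if any(not 0 <= r <= 5 for r in adjustment_ratings):
        raise ValueError("each rating must be between 0 and 5")
    count_total = sum(AVERAGE_WEIGHTS[name] * counts.get(name, 0)
                      for name in AVERAGE_WEIGHTS)
    return count_total * (0.65 + 0.01 * sum(adjustment_ratings))


# Hypothetical system: every adjustment factor rated 3 (average influence).
example_counts = {
    "external_inputs": 10,
    "external_outputs": 5,
    "external_inquiries": 4,
    "internal_logical_files": 3,
    "external_interface_files": 2,
}
print(round(function_points(example_counts, [3] * 14), 2))
```

In practice each item is first classified as simple, average, or complex, and a different weight applies to each class; the single weight per category here is a simplification.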

Lines of Code (LOC)

Lines of code (LOC) is one of the most widely used methods for size estimation. LOC can be defined as the number of delivered lines of code, excluding comments and blank lines. It is highly dependent on the programming language used, as code writing varies from one programming language to another. For example, a large program written in assembly language needs more lines of code than the same program written in C++.

From LOC, simple size-oriented metrics can be derived such as errors per KLOC (thousand lines of code), defects per KLOC, cost per KLOC, and so on. LOC has also been used to predict program complexity, development effort, programmer performance, and so on. For example, Halstead proposed a number of metrics, which are used to calculate program length, program volume, program difficulty, and development effort.
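Size-oriented metrics of this kind are simple normalizations by program size. A minimal sketch, with a hypothetical helper name and made-up project figures:

```python
def per_kloc(value, lines_of_code):
    """Normalize a project measure (errors, defects, cost) per thousand LOC."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return value / (lines_of_code / 1000)


# Hypothetical project: 10,000 delivered lines, 50 errors, 20,000 cost units.
print(per_kloc(50, 10_000))      # errors per KLOC
print(per_kloc(20_000, 10_000))  # cost per KLOC
```

Because LOC depends on the programming language, such figures are only comparable between projects written in the same language and counted with the same rules.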

Metrics for Specification Quality

To evaluate the quality of the analysis model and requirements specification, a set of characteristics has been proposed. These characteristics include specificity, completeness, correctness, understandability, verifiability, internal and external consistency, achievability, concision, traceability, modifiability, precision, and reusability.

Most of the characteristics listed above are qualitative in nature. However, each of these characteristics can be represented by using one or more metrics. For example, if there are nr requirements in a specification, then nr can be calculated by the following equation.

nr = nf + nnf

Where

nf = number of functional requirements

nnf = number of non-functional requirements.

In order to determine the specificity of requirements, a metric based on the consistency of the reviewer’s understanding of each requirement has been proposed. This metric is represented by the following equation.

Q1 = nui/nr

Where

nui = number of requirements for which reviewers have same understanding

Q1 = specificity.

Ambiguity of the specification depends on the value of Q1. The closer the value of Q1 is to 1, the lower the probability of ambiguity.

Completeness of the functional requirements can be calculated by the following equation.

Q2 = nu / [ni * ns]

Where

nu = number of unique functional requirements

ni = number of inputs defined by the specification

ns = number of states specified.

Q2 in the above equation considers only functional requirements and ignores non-functional requirements. In order to consider non-functional requirements, it is necessary to consider the degree to which requirements have been validated. This can be represented by the following equation.

Q3 = nc / [nc + nnv]

Where

nc = number of requirements validated as correct

nnv = number of requirements that are yet to be validated.
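The three specification quality ratios above can be computed directly from review counts. The sketch below follows the equations as given; the function names and the sample counts are illustrative assumptions.

```python
def specificity(n_ui, n_f, n_nf):
    """Q1 = nui / nr, where nr = nf + nnf."""
    n_r = n_f + n_nf
    return n_ui / n_r


def completeness(n_u, n_i, n_s):
    """Q2 = nu / (ni * ns); covers functional requirements only."""
    return n_u / (n_i * n_s)


def validated_fraction(n_c, n_nv):
    """Q3 = nc / (nc + nnv)."""
    return n_c / (n_c + n_nv)


# Hypothetical review: 40 functional and 10 non-functional requirements,
# 45 of which every reviewer interpreted in the same way.
print(specificity(45, 40, 10))     # Q1
print(completeness(18, 6, 4))      # Q2
print(validated_fraction(30, 10))  # Q3
```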

Metrics for Software Design

The success of a software project depends largely on the quality and effectiveness of the software design. Hence, it is important to develop software metrics from which meaningful indicators can be derived. With the help of these indicators, necessary steps are taken to design the software according to the user requirements. Various design metrics such as architectural design metrics, component-level design metrics, user-interface design metrics, and metrics for object-oriented design are used to indicate the complexity, quality, and so on of the software design.

Architectural Design Metrics

These metrics focus on the features of the program architecture with stress on architectural structure and effectiveness of components (or modules) within the architecture. In architectural design metrics, three software design complexity measures are defined, namely, structural complexity, data complexity, and system complexity.

In a hierarchical architecture (call and return architecture), the structural complexity of a module ‘j’ is calculated by the following equation.

S(j) = [fout(j)]^2

Where

fout(j) = fan-out of module ‘j’ [Here, fan-out means the number of modules that are directly subordinate to module j, that is, the modules directly invoked by it].

Complexity in the internal interface for a module ‘j’ is indicated with the help of data complexity, which is calculated by the following equation.

D(j) = V(j) / [fout(j) + 1]

Where

V(j) = number of input and output variables passed to and from module ‘j’.

System complexity is the sum of structural complexity and data complexity and is calculated by the following equation.

C(j) = S(j) + D(j)

As each of these complexity values increases, the overall architectural complexity of the system also increases, which in turn increases the integration and testing effort in the later stages.
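The three complexity measures can be sketched in a few lines. The function names and the sample module (fan-out of 3, eight input and output variables) are assumptions made for illustration.

```python
def structural_complexity(fan_out):
    """S(j) = fan-out of module j, squared."""
    return fan_out ** 2


def data_complexity(io_variables, fan_out):
    """D(j) = V(j) / (fan-out of module j + 1)."""
    return io_variables / (fan_out + 1)


def system_complexity(io_variables, fan_out):
    """C(j) = S(j) + D(j)."""
    return (structural_complexity(fan_out)
            + data_complexity(io_variables, fan_out))


# Hypothetical module that calls 3 subordinates and passes 8 variables.
print(structural_complexity(3))  # S(j)
print(data_complexity(8, 3))     # D(j)
print(system_complexity(8, 3))   # C(j)
```

Note how the squared fan-out term dominates: doubling the fan-out quadruples S(j), while D(j) actually shrinks.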

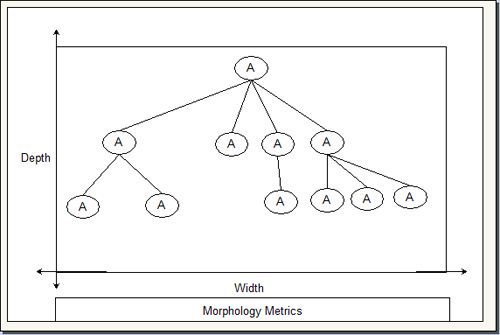

In addition, various other metrics like simple morphology metrics are also used. These metrics allow comparison of different program architecture using a set of straightforward dimensions. A metric can be developed by referring to call and return architecture. This metric can be defined by the following equation.

Size = n+a

Where

n = number of nodes

a= number of arcs.

For example, suppose an architecture has 11 nodes and 10 arcs. Here, Size can be calculated as follows.

Size = n + a = 11 + 10 = 21.

Depth is defined as the longest path from the top node (root) to the leaf node and width is defined as the maximum number of nodes at any one level.

Coupling of the architecture is indicated by arc-to-node ratio. This ratio also measures the connectivity density of the architecture and is calculated by the following equation.

r=a/n
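The morphology metrics above (size, depth, width, and arc-to-node ratio) can be read off a call tree mechanically. The sketch below assumes the architecture is a strict tree given as a dictionary from each module to the modules it calls, and it counts depth in arcs along the longest root-to-leaf path; both choices, along with the module names, are assumptions for illustration.

```python
def morphology(tree, root):
    """Compute simple morphology metrics for a call-and-return tree.

    tree: dict mapping a module name to the list of modules it calls.
    Assumes a strict tree: one root, one caller per module, no cycles.
    """
    nodes = set(tree) | {child for children in tree.values() for child in children}
    arcs = sum(len(children) for children in tree.values())

    # Walk the tree level by level to record how many nodes each level holds.
    level_sizes = []
    current_level = [root]
    while current_level:
        level_sizes.append(len(current_level))
        current_level = [child
                         for node in current_level
                         for child in tree.get(node, [])]

    return {
        "size": len(nodes) + arcs,         # Size = n + a
        "depth": len(level_sizes) - 1,     # arcs on the longest root-to-leaf path
        "width": max(level_sizes),         # most nodes on any one level
        "arc_to_node_ratio": arcs / len(nodes),  # r = a / n
    }


# Hypothetical architecture with 11 nodes and 10 arcs.
call_tree = {
    "a": ["b", "c", "d"],
    "b": ["e", "f"],
    "c": ["g"],
    "d": ["h", "i", "j"],
    "g": ["k"],
}
metrics = morphology(call_tree, "a")
print(metrics)
```

For a strict tree the arc count is always one less than the node count, so the ratio r only becomes informative once modules are shared between callers, which this simple walk does not handle.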

Quality of software design also plays an important role in determining the overall quality of the software. Many software quality indicators that are based on measurable design characteristics of a computer program have been proposed. One of them is Design Structural Quality Index (DSQI), which is derived from the information obtained from data and architectural design. To calculate DSQI, a number of steps are followed, which are listed below.

1. To calculate DSQI, the following values must be determined.

- Number of components in program architecture (S1)

- Number of components whose correct function is determined by the source of input data (S2)

- Number of components whose correct function depends on previous processing (S3)

- Number of database items (S4)

- Number of different database items (S5)

- Number of database segments (S6)

- Number of components having single entry and exit (S7).

2. Once all the values from S1 to S7 are known, some intermediate values are calculated, which are listed below.

Program structure (D1): If a distinct method (for example, data flow-oriented or object-oriented design) is used for developing the architectural design then D1 = 1, else D1 = 0

Module independence (D2): D2 = 1-(S2/S1)

Modules not dependent on prior processing (D3): D3 = 1-(S3/S1)

Database size (D4): D4 = 1-(S5/S4)

Database compartmentalization (D5):D5 = 1-(S6/S4)

Module entrance/exit characteristic (D6): D6 = 1-(S7/S1)

3. Once all the intermediate values are calculated, DSQI is calculated by the following equation.

DSQI = ∑WiDi

Where

i = 1 to 6

∑Wi = 1 (Wi is the weighting of the importance of intermediate values).
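The three steps above translate directly into code. In this sketch the function name, the equal weights, and the sample counts are assumptions; the value of D1 is passed in as 1 or 0 rather than derived.

```python
def dsqi(s1, s2, s3, s4, s5, s6, s7, program_structure, weights):
    """Design Structural Quality Index: weighted sum of D1..D6.

    s1..s7: the counts S1..S7 defined in step 1.
    program_structure: D1, supplied by the caller as 1 or 0.
    weights: six weights W1..W6 that must sum to 1.
    """
    if len(weights) != 6 or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("expected six weights that sum to 1")
    intermediates = [
        program_structure,  # D1: program structure
        1 - s2 / s1,        # D2: module independence
        1 - s3 / s1,        # D3: modules not dependent on prior processing
        1 - s5 / s4,        # D4: database size
        1 - s6 / s4,        # D5: database compartmentalization
        1 - s7 / s1,        # D6: module entrance/exit characteristic
    ]
    return sum(w * d for w, d in zip(weights, intermediates))


# Hypothetical design with all six intermediate values weighted equally.
equal_weights = [1 / 6] * 6
score = dsqi(s1=20, s2=5, s3=4, s4=50, s5=40, s6=10, s7=18,
             program_structure=1, weights=equal_weights)
print(round(score, 3))
```

A DSQI value is mainly useful in comparison with values from earlier designs of the same organization; a design that scores noticeably lower than its predecessors is a candidate for further design work and review.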

In conventional software, the focus of component-level design metrics is on the internal characteristics of the software components. The software engineer can judge the quality of the component-level design by measuring module cohesion, coupling, and complexity. Component-level design metrics are applied after the procedural design is final. Various metrics developed for component-level design are listed below.

- Cohesion metrics: Cohesiveness of a module can be indicated by the definitions of the following five concepts and measures.

  - Data slice: Defined as a backward walk through a module, which looks for values of data that affect the state of the module as the walk starts

  - Data tokens: Defined as a set of variables defined for a module

  - Glue tokens: Defined as a set of data tokens that lie on one or more data slices

  - Superglue tokens: Defined as tokens that are present in every data slice in the module

  - Stickiness: Defined as the stickiness of the glue token, which depends on the number of data slices that it binds.

- Coupling metrics: These indicate the degree to which a module is connected to other modules, global data, and the outside environment. A metric for module coupling has been proposed, which includes data and control flow coupling, global coupling, and environmental coupling.

  - Measures defined for data and control flow coupling are listed below.

    di = total number of input data parameters

    ci = total number of input control parameters

    do = total number of output data parameters

    co = total number of output control parameters

  - Measures defined for global coupling are listed below.

    gd = number of global variables utilized as data

    gc = number of global variables utilized as control

  - Measures defined for environmental coupling are listed below.

    w = number of modules called

    r = number of modules calling the module under consideration

By using the above mentioned measures, module-coupling indicator (mc) is calculated by using the following equation.

mc = K/M

Where

K = proportionality constant

M = di + (a*ci) + do+ (b*co)+ gd+ (c*gc) + w + r.

Note that K, a, b, and c are empirically derived. The values of mc and overall module coupling are inversely proportional to each other. In other words, as the value of mc increases, the overall module coupling decreases.
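A minimal sketch of the module-coupling indicator follows. The constants are empirically derived, as noted above; the defaults used here (K = 1 and a = b = c = 2) are a commonly quoted textbook setting and should be treated as assumptions, as should the function name and sample modules.

```python
def module_coupling(d_i, c_i, d_o, c_o, g_d, g_c, w, r,
                    k=1.0, a=2.0, b=2.0, c=2.0):
    """mc = K / M, with M = di + a*ci + do + b*co + gd + c*gc + w + r.

    k, a, b, c are empirically derived constants; the defaults are assumed.
    """
    m = d_i + a * c_i + d_o + b * c_o + g_d + c * g_c + w + r
    if m == 0:
        raise ValueError("module has no connections; mc is undefined")
    return k / m


# Loosely coupled module: one input, one output, called by one module.
loose = module_coupling(d_i=1, c_i=0, d_o=1, c_o=0, g_d=0, g_c=0, w=0, r=1)

# Heavily connected module: many parameters, global data, several callers.
tight = module_coupling(d_i=5, c_i=5, d_o=5, c_o=5, g_d=10, g_c=0, w=3, r=4)

print(round(loose, 2), round(tight, 2))
```

The loosely coupled module yields the larger mc, which matches the inverse relationship described above.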

- Complexity metrics: Different types of software metrics can be calculated to ascertain the complexity of program control flow. One of the most widely used complexity metrics for ascertaining the complexity of a program is cyclomatic complexity.
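The text above names cyclomatic complexity without giving its formula. A short sketch, assuming the standard definition V(G) = E - N + 2 for a single connected flow graph with E edges and N nodes; the edge-list representation and the sample graph are illustrative assumptions.

```python
def cyclomatic_complexity(edges):
    """V(G) = E - N + 2 for one connected control flow graph.

    edges: list of (source, target) pairs, one per control transfer.
    """
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2


# Hypothetical routine: an if/else (node 1) followed by a loop (node 4).
flow_graph = [
    (1, 2), (1, 3),  # branch on the condition at node 1
    (2, 4), (3, 4),  # both branches rejoin at the loop test
    (4, 5), (5, 4),  # loop body and back edge
    (4, 6),          # loop exit
]
print(cyclomatic_complexity(flow_graph))
```

The result equals the number of decision nodes plus one (here nodes 1 and 4), which is a quick way to check a hand count.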

Many metrics have been proposed for user interface design. However, the layout appropriateness metric and the cohesion metric for user interface design are the most commonly used. Layout Appropriateness (LA) is an important metric for user interface design. A typical Graphical User Interface (GUI) uses many layout entities such as icons, text, menus, windows, and so on. These layout entities help users to complete their tasks easily. In order to complete a given task with the help of the GUI, the user moves from one layout entity to another.

Appropriateness of the interface can be shown by the absolute and relative position of each layout entity, the frequency with which each layout entity is used, and the cost of changeover from one layout entity to another.
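One way to turn frequency and changeover cost into a number is sketched below. It assumes the commonly cited formulation in which the cost of a layout is the sum of frequency times cost over all transitions, and LA is 100 times the ratio of the optimal layout's cost to the proposed layout's cost. That formulation, the function names, and the figures are assumptions, not taken from the text above.

```python
def layout_cost(transitions):
    """Sum of (frequency of transition * cost of transition).

    transitions: iterable of (frequency, cost) pairs, one per changeover
    between two layout entities.
    """
    return sum(frequency * cost for frequency, cost in transitions)


def layout_appropriateness(optimal_cost, proposed_cost):
    """LA = 100 * (cost of optimal layout) / (cost of proposed layout)."""
    return 100 * optimal_cost / proposed_cost


# Hypothetical usage counts and movement costs for three transitions.
proposed = layout_cost([(50, 4), (30, 10), (20, 2)])
optimal = layout_cost([(50, 2), (30, 4), (20, 6)])
print(proposed, optimal, round(layout_appropriateness(optimal, proposed), 1))
```

An LA of 100 would mean the proposed layout is already as cheap to navigate as the best arrangement found.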

Cohesion metric for user interface measures the connection among the onscreen contents. Cohesion for user interface becomes high when content presented on the screen is from a single major data object (defined in the analysis model). On the other hand, if content presented on the screen is from different data objects, then cohesion for user interface is low.

In addition to these metrics, direct measures of user interface interaction focus on the time required to complete a specific activity, the time required to recover from an error condition, counts of specific operations, text density, and text size. Once all these measures are collected, they are organized to form meaningful user interface metrics, which can help in improving the quality of the user interface.

Metrics for Object-oriented Design

In order to develop metrics for object-oriented (OO) design, nine distinct and measurable characteristics of OO design are considered, which are listed below.

- Complexity: Determined by assessing how classes are related to each other

- Coupling: Defined as the physical connection between OO design elements

- Sufficiency: Defined as the degree to which an abstraction possesses the features required of it

- Cohesion: Determined by analyzing the degree to which a set of properties that the class possesses is part of the problem domain or design domain

- Primitiveness: Indicates the degree to which the operation is atomic

- Similarity: Indicates similarity between two or more classes in terms of their structure, function, behavior, or purpose

- Volatility: Defined as the probability of occurrence of change in the OO design

- Size: Defined with the help of four different views, namely, population, volume, length, and functionality. Population is measured by calculating the total number of OO entities, which can be in the form of classes or operations. Volume measures are collected dynamically at any given point of time. Length is a measure of interconnected designs such as depth of inheritance tree. Functionality indicates the value rendered to the user by the OO application.

Metrics for Coding

Halstead proposed the first analytic laws for computer science by using a set of primitive measures, which can be derived once the design phase is complete and code is generated. These measures are listed below.

n1 = number of distinct operators in a program

n2 = number of distinct operands in a program

N1 = total number of operators

N2 = total number of operands.

By using these measures, Halstead developed an expression for overall program length, program volume, program difficulty, development effort, and so on.

Program length (N) can be calculated by using the following equation.

N = n1 log2 n1 + n2 log2 n2.

Program volume (V) can be calculated by using the following equation.

V = N log2 (n1+n2).

Note that program volume depends on the programming language used and represents the volume of information (in bits) required to specify a program. Volume ratio (L) can be calculated by using the following equation.

L = (Volume of the most compact form of a program) / (Volume of the actual program)

Where the value of L must be less than 1. Volume ratio can also be calculated by using the following equation.

L = (2/n1) * (n2/N2).

Program difficulty level (D) and effort (E) can be calculated by using the following equations.

D = (n1/2)*(N2/n2).

E = D * V.
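The Halstead equations above can be collected into one small function. This sketch follows the equations exactly as given in this section, so N is the length computed from n1 and n2 and is then used for the volume; the function name and the sample counts are assumptions.

```python
import math


def halstead(n1, n2, N2):
    """Apply the Halstead equations from this section.

    n1, n2: number of distinct operators and operands.
    N2: total number of operands.
    """
    length = n1 * math.log2(n1) + n2 * math.log2(n2)  # N
    volume = length * math.log2(n1 + n2)              # V
    volume_ratio = (2 / n1) * (n2 / N2)               # L
    difficulty = (n1 / 2) * (N2 / n2)                 # D
    effort = difficulty * volume                      # E = D * V
    return {
        "length": length,
        "volume": volume,
        "volume_ratio": volume_ratio,
        "difficulty": difficulty,
        "effort": effort,
    }


# Hypothetical module: 8 distinct operators, 8 distinct operands,
# 16 operand occurrences in total.
result = halstead(n1=8, n2=8, N2=16)
print(result)
```

Note that D is the reciprocal of L, so a program far from its most compact form (small L) is rated as more difficult.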

Metrics for Software Testing

The majority of the metrics used for testing focus on the testing process rather than the technical characteristics of the tests. Generally, testers use metrics for analysis, design, and coding to guide them in the design and execution of test cases.

Function point can be effectively used to estimate testing effort. Various characteristics like errors discovered, number of test cases needed, testing effort, and so on can be determined by estimating the number of function points in the current project and comparing them with any previous project.

Metrics used for architectural design can be used to indicate how integration testing can be carried out. In addition, cyclomatic complexity can be used effectively as a metric in the basis-path testing to determine the number of test cases needed.

Halstead measures can be used to derive metrics for testing effort. By using program volume (V) and program level (PL), Halstead effort (e) can be calculated by the following equations.

e = V / PL

Where

PL = 1 / [(n1/2) * (N2/n2)] … (1)

For a particular module (z), the percentage of overall testing effort allocated can be calculated by the following equation.

Percentage of testing effort (z) = e(z) / ∑e(i)

Where e(z) is calculated for module z with the help of equation (1). The summation in the denominator is the sum of Halstead effort (e) over all the modules of the system.

For developing metrics for object-oriented (OO) testing, different types of design metrics that have a direct impact on the testability of object-oriented system are considered. While developing metrics for OO testing, inheritance and encapsulation are also considered. A set of metrics proposed for OO testing is listed below.

- Lack of cohesion in methods (LCOM): This indicates the number of states to be tested. LCOM is the number of methods that access one or more of the same attributes. The value of LCOM is 0 if no methods access the same attributes. As the value of LCOM increases, more states need to be tested.

- Percent public and protected (PAP): This shows the percentage of class attributes that are public or protected. The probability of adverse effects among classes increases with an increase in the value of PAP, as public and protected attributes lead to potentially higher coupling.

- Public access to data members (PAD): This shows the number of classes that can access attributes of another class. Adverse effects among classes increase as the value of PAD increases.

- Number of root classes (NOR): This specifies the number of different class hierarchies that are described in the design model. Testing effort increases with an increase in NOR.

- Fan-in (FIN): This indicates multiple inheritance. If the value of FIN is greater than 1, the class inherits its attributes and operations from more than one root class. Note that this situation (where FIN > 1) should be avoided where possible.

Metrics for Software Maintenance

Some metrics have been designed explicitly for maintenance activities. IEEE has proposed the Software Maturity Index (SMI), which provides an indication of the stability of a software product. For calculating SMI, the following parameters are considered.

- Number of modules in current release (MT)

- Number of modules that have been changed in the current release (Fe)

- Number of modules that have been added in the current release (Fa)

- Number of modules from the preceding release that have been deleted in the current release (Fd)

Once all the parameters are known, SMI can be calculated by using the following equation.

SMI = [MT - (Fa + Fe + Fd)] / MT.

Note that a product begins to stabilize as SMI approaches 1.0. SMI can also be used as a metric for planning software maintenance activities by developing empirical models in order to know the effort required for maintenance.
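The SMI equation is simple enough to check by hand, but a small helper makes it easy to track across releases. The function name and the release figures below are made up for illustration.

```python
def software_maturity_index(modules_total, changed, added, deleted):
    """SMI = [MT - (Fa + Fe + Fd)] / MT.

    modules_total: number of modules in the current release (MT).
    changed, added, deleted: modules changed, added, and deleted
    in the current release.
    """
    if modules_total <= 0:
        raise ValueError("modules_total must be positive")
    return (modules_total - (added + changed + deleted)) / modules_total


# Hypothetical release: 940 modules, 90 changed, 40 added, 12 deleted.
smi = software_maturity_index(940, changed=90, added=40, deleted=12)
print(round(smi, 3))
```

A release that touches only a handful of modules pushes the value towards 1.0, which is the stabilization signal described above.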

Project Metrics

Project metrics enable project managers to assess current projects, track potential risks, identify problem areas, adjust workflow, and evaluate the project team’s ability to control the quality of work products. Note that project metrics are used for tactical purposes, whereas process metrics are used for strategic purposes.

Project metrics serve two purposes. One, they help to minimize the development schedule by guiding the adjustments needed to avoid delays and mitigate potential risks and problems. Two, they are used to assess product quality on a regular basis and to modify the technical approach if required. As the quality of the project improves, the number of errors and defects is reduced, which in turn leads to a decrease in the overall cost of the software project.

Often, the first application of project metrics occurs during estimation. Here, metrics collected from previous projects act as a base from which effort and time estimates for the current project are calculated. As the project proceeds, the original estimates of effort and time are compared with the actual measures of effort and time. This comparison helps the project manager to monitor and control the progress of the project.

As development proceeds, project metrics are used to track the errors detected during each development phase. For example, as software evolves from design to coding, project metrics are collected to assess the quality of the design and obtain indicators that in turn affect the approach chosen for coding and testing. Project metrics are also used to measure production rate, which is expressed in terms of models developed, function points, and delivered lines of code.

Dinesh Thakur holds an B.C.A, MCDBA, MCSD certifications. Dinesh authors the hugely popular
