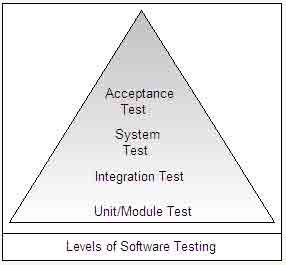

The software is tested at different levels. Initially, the individual units are tested, and once they are tested, they are integrated and checked for the interfaces established between them. After this, the entire software is tested to ensure that the output produced is according to user requirements. There are four levels of software testing, namely, unit testing, integration testing, system testing, and acceptance testing.


Unit Testing

Unit testing is performed to test the individual units of software. Since the software comprises various units or modules, detecting errors in these units is simple and takes less time, as each unit is small. However, the output produced by one unit often becomes the input for another unit. Hence, if one unit produces incorrect output and passes it to a second unit, the second unit also produces wrong output. If such errors are not corrected, the entire software may produce unexpected outputs. To avoid this, all the units in the software are tested independently using unit testing.

Unit testing is not performed just once during software development; it is repeated whenever the software is modified or used in a new environment. Other points to note about unit testing are listed below.

- Each unit is tested separately, independently of the other units of the software.

- The developers themselves perform this testing.

- White box testing methods are used in this testing.

Unit testing is used to verify the code produced during software coding and is responsible for assessing the correctness of a particular unit of source code. In addition, unit testing performs the following functions.

- It tests all control paths to uncover as many errors as possible in the conditions present in the unit being tested.

- It ensures that all statements in the unit have been executed at least once.

- It tests data structures (like stacks, queues) that represent relationships among individual data elements.

- It checks the range of inputs given to units. This is because every input range has a minimum and a maximum value, and the input given should lie within this range.

- It ensures that the data entered in variables is of the same data type as defined in the unit.

- It checks all arithmetic calculations present in the unit with all possible combinations of input values.

Unit testing is performed by conducting a number of unit tests, where each unit test checks an individual component that is either new or modified. A unit test is also referred to as a module test, as it examines the individual units of code that constitute the program and eventually the software. In a conventional structured programming language such as C, the basic unit is a function or subroutine, while in an object-oriented language such as C++, the basic unit is a class.

Various tests that are performed as a part of unit tests are listed below.

- Module interface: This is tested to check whether information flows properly into and out of the ‘unit’ being tested. Note that data flow across a module interface must be tested before any other test is initiated.

- Local data structure: This is tested to check whether temporarily stored data maintains its integrity while an algorithm is being executed.

- Boundary conditions: These are tested to check whether the module provides the desired functionality within the specified boundaries.

- Independent paths: These are tested to check whether all statements in a module are executed at least once. Note that in this testing, the entire control structure should be exercised.

- Error-handling paths: After successful completion of various tests, error handling paths are tested.
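As an illustration, several of the tests above can be written as a small unit test module. The sketch below uses Python's standard `unittest` framework; the unit under test, `classify_score`, and its 0 to 100 input range are hypothetical and not taken from the text. One test exercises each independent path, one checks the boundaries of the input range, and one exercises the error-handling paths.

```python
import unittest


def classify_score(score):
    """Hypothetical unit under test: maps a score in [0, 100] to a result."""
    if not isinstance(score, int):
        raise TypeError("score must be an integer")
    if score < 0 or score > 100:
        raise ValueError("score out of range")
    if score >= 50:
        return "pass"
    return "fail"


class ClassifyScoreTests(unittest.TestCase):
    def test_independent_paths(self):
        # One input per path through the unit, so every statement runs at least once.
        self.assertEqual(classify_score(75), "pass")
        self.assertEqual(classify_score(20), "fail")

    def test_boundary_conditions(self):
        # Minimum, maximum, and both sides of the pass/fail threshold.
        self.assertEqual(classify_score(0), "fail")
        self.assertEqual(classify_score(49), "fail")
        self.assertEqual(classify_score(50), "pass")
        self.assertEqual(classify_score(100), "pass")

    def test_error_handling_paths(self):
        # Values just outside the range, and a value of the wrong data type.
        with self.assertRaises(ValueError):
            classify_score(-1)
        with self.assertRaises(ValueError):
            classify_score(101)
        with self.assertRaises(TypeError):
            classify_score("75")


if __name__ == "__main__":
    # exit=False keeps the interpreter running after the test report.
    unittest.main(exit=False)
```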

Various unit test cases are generated to perform unit testing. Test cases are designed to uncover errors that occur due to erroneous computations, incorrect comparisons, and improper control flow. A proper unit test case ensures that unit testing is performed efficiently. To develop test cases, the following points should always be considered.

- Expected functionality: A test case is created for testing all functionalities present in the unit being tested. For example, if the unit executes an SQL query that creates Table_A and alters Table_B, a test case is developed to make sure that Table_A is created and Table_B is altered.

- Input values: Test cases are developed to check the following aspects of inputs.

  - Every input value: A test case is developed to check every input value that is accepted by the unit being tested. For example, if a program is developed to print the multiplication table of five, then a test case is developed to verify that only five is accepted as input.

  - Validation of input: Before executing software, it is important to verify whether all inputs are valid. For this purpose, a test case is developed to verify the validation of all inputs. For example, if a numeric field accepts only positive values, then a test case is developed to verify that the field accepts only positive values.

  - Boundary conditions: Generally, software fails at the boundaries of the input domain (the maximum and minimum values of the input domain). Thus, a test case is developed that is capable of detecting errors that cause the software to fail at these boundaries. For example, errors may occur while processing the last element of an array; in this case, a test case is developed to check whether an error occurs while processing that element.

  - Limitation of data types: Every data type limits the values that a variable can hold. For example, if a variable is of type long, a test case is developed to ensure that the input entered for the variable is within the acceptable range of the long data type.

- Output values: A test case is designed to determine whether the desired output is produced by the unit. For example, when two numbers, ‘2’ and ‘3’ are entered as input in a program that multiplies two numbers, a test case is developed to verify that the program produces the correct output value, that is, ‘6’.

- Path coverage: There can be many conditions specified in a unit, and executing all of them requires traversing many paths. For example, when a unit consists of nested ‘if’ statements and all of them are to be executed, a test case is developed to check whether all these paths are traversed.

- Assumptions: For a unit to execute properly, certain assumptions are made. Test cases are developed by considering these assumptions. For example, a test case is developed to determine whether the unit generates errors in case the assumptions are not met.

- Abnormal terminations: A test case is developed to check the behavior of a unit in case of abnormal termination. For example, when a power cut shuts down the computer and terminates a program, a test case is developed to check how the unit behaves as a result of this abnormal termination.

- Error messages: Error messages that appear when the software is executed should be short, precise, self-explanatory, and free from grammatical mistakes. For example, if the ‘print’ command is given when no printer is installed, the error message that appears should be ‘Printer not installed’ instead of ‘Problem has occurred as no printer is installed and hence unable to print’. In this case, a test case is developed to check whether the error message displayed matches the condition occurring in the software.
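Several of the test case categories above can be illustrated with one small test class. The units here (`multiply`, `validate_quantity`, `last_element`, and `print_document`) are hypothetical stand-ins chosen to match the examples in the text; this is a sketch using Python's `unittest`, not a prescribed layout.

```python
import unittest


# Hypothetical units used only to illustrate the test case categories above.
def multiply(a, b):
    return a * b


def validate_quantity(value):
    """Accepts only positive values, like a positive-only numeric field."""
    if value <= 0:
        raise ValueError("Quantity must be positive")
    return value


def last_element(items):
    """Returns the last element of a non-empty list."""
    return items[len(items) - 1]


def print_document(printer_installed):
    if not printer_installed:
        return "Printer not installed"
    return "Printing"


class TestCaseCategories(unittest.TestCase):
    def test_output_value(self):
        # Output values: inputs 2 and 3 must produce 6.
        self.assertEqual(multiply(2, 3), 6)

    def test_input_validation(self):
        # Validation of input: positive values accepted, zero and negatives rejected.
        self.assertEqual(validate_quantity(5), 5)
        for bad in (0, -1):
            with self.assertRaises(ValueError):
                validate_quantity(bad)

    def test_last_element_boundary(self):
        # Boundary conditions: processing the last element of an array.
        self.assertEqual(last_element([4, 8, 15]), 15)
        self.assertEqual(last_element([42]), 42)

    def test_error_message(self):
        # Error messages: short and specific to the condition that occurred.
        self.assertEqual(print_document(printer_installed=False), "Printer not installed")


if __name__ == "__main__":
    unittest.main(exit=False)
```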

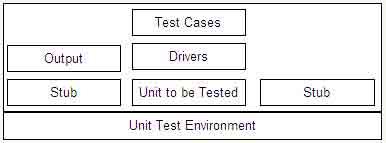

Unit tests can be designed before coding begins or after the code is developed. Reviewing the design information guides the creation of test cases, which are used to detect errors in the various units. Since a component is not an independent program, drivers and/or stubs are used to test the units independently. A driver is a module that takes input from a test case, passes this input to the unit being tested, and prints the output produced. A stub is a module that stands in for a unit referenced by the unit being tested. It uses the interface of the subordinate unit, does minimal data manipulation, and returns control to the unit being tested.

Note: Drivers and stubs are not delivered with the final software product. Thus, they represent an overhead.
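The roles of a driver and a stub can be sketched as follows. This is a minimal Python illustration with assumed names. `compute_total` is a hypothetical unit under test that calls a subordinate unit to look up prices. `price_lookup_stub` stands in for that subordinate unit. `driver` feeds test-case inputs to the unit and prints what the unit produces.

```python
def compute_total(item_ids, price_lookup):
    """Hypothetical unit under test: sums prices obtained from a subordinate unit."""
    total = 0
    for item_id in item_ids:
        total += price_lookup(item_id)
    return total


# Stub: replaces the real (possibly unfinished) price lookup module.
# It keeps the same interface, returns canned data, and hands control back.
STUB_PRICES = {"A": 10, "B": 25}


def price_lookup_stub(item_id):
    return STUB_PRICES[item_id]


# Driver: takes input from test cases, passes it to the unit, and prints the output.
def driver(test_cases):
    results = []
    for item_ids, expected in test_cases:
        actual = compute_total(item_ids, price_lookup_stub)
        status = "PASS" if actual == expected else "FAIL"
        print(f"{item_ids} -> {actual} (expected {expected}) {status}")
        results.append(status)
    return results


if __name__ == "__main__":
    driver([(["A"], 10), (["A", "B"], 35), ([], 0)])
```

Passing the subordinate unit in as a parameter is one convenient way to swap the stub for the real module later. Other languages often achieve the same effect through interfaces or link-time substitution.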

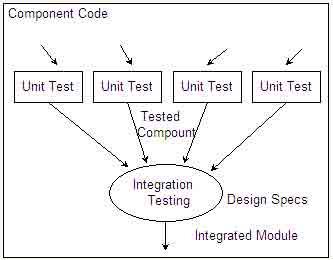

Integration Testing

Once unit testing is complete, integration testing begins. In integration testing, the units validated during unit testing are combined to form a subsystem. Integration testing aims to ensure that all the modules work properly, as per the user requirements, when they are put together (that is, integrated).

The objective of integration testing is to take all the tested individual modules, integrate them, test them again, and develop software that conforms to the design specifications. Other points to note about integration testing are listed below.

- It ensures that all modules work together properly and transfer accurate data across their interfaces.

- It is performed with an intention to uncover errors that lie in the interfaces among the integrated components.

- It tests those components that are new or have been modified or affected due to a change.

The big bang approach and the incremental integration approach are used to integrate the modules of a program. In the big bang approach, all modules are integrated first and then the entire program is tested. However, when the entire program is tested, a whole set of errors may be detected at once. These errors are difficult to correct because it is difficult to isolate their exact cause when the program is very large. In addition, correcting one set of errors may expose new ones, and this cycle can seem endless.

To overcome this problem, incremental integration is followed. The incremental integration approach tests the program in small increments. Errors are easier to detect in this approach because only a small segment of code is tested at a time. Moreover, interfaces can be tested completely with this approach. Various approaches are used for performing incremental integration testing, namely, top-down integration testing, bottom-up integration testing, regression testing, and smoke testing.

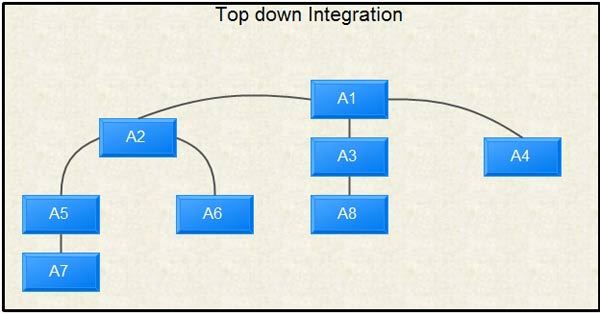

In top-down integration testing, the software is developed and tested by integrating the individual modules, moving downwards in the control hierarchy. Initially, only one module, known as the main control module, is tested. After this, all the modules called by it are combined with it and tested. This process continues until all the modules in the software are integrated and tested.

A module being tested may also call some of its subordinate modules. To simulate the activity of these subordinate modules, a stub is written; the stub replaces a module that is subordinate to the module being tested. Once control is passed to the stub, it manipulates the data as little as possible, verifies the entry, and passes control back to the module under test. The following steps are used to perform top-down integration testing.

- The main control module is used as a test driver and all the modules that are directly subordinate to the main control module are replaced with stubs.

- The subordinate stubs are then replaced with actual modules, one stub at a time. The way of replacing stubs with modules depends on the approach (depth first or breadth first) used for integration.

- As each new module is integrated, tests are conducted.

- After each set of tests is complete, another stub is replaced with the actual module.

- In order to ensure no new errors have been introduced, regression testing may be performed.

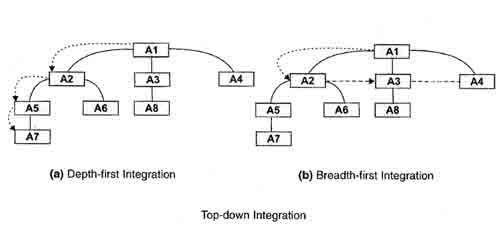

Top-down integration testing uses either depth-first integration or breadth-first integration. In depth-first integration, the modules are integrated starting from the left and moving down the control hierarchy. Initially, modules A1, A2, A5, and A7 are integrated. Then, module A6 is integrated with module A2. After this, control moves to the modules at the centre of the control hierarchy, that is, module A3 is integrated with module A1 and then module A8 is integrated with module A3. Finally, control moves to the right, integrating module A4 with module A1.

In breadth-first integration, all modules at one level are integrated before integration moves down to the next lower level. Initially, modules A2, A3, and A4 are integrated with module A1. Integration then moves down, integrating modules A5 and A6 with module A2 and module A8 with module A3. Finally, module A7 is integrated with module A5.
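The two orders can be reproduced by traversing the control hierarchy. The figure itself is not available, so the hierarchy below is reconstructed from the description above: A1 calls A2, A3, and A4; A2 calls A5 and A6; A3 calls A8; and A5 calls A7. The sketch lists the order in which modules replace their stubs under each strategy.

```python
from collections import deque

# Control hierarchy reconstructed from the text; children are listed left to right.
HIERARCHY = {
    "A1": ["A2", "A3", "A4"],
    "A2": ["A5", "A6"],
    "A3": ["A8"],
    "A4": [],
    "A5": ["A7"],
    "A6": [],
    "A7": [],
    "A8": [],
}


def depth_first_order(root):
    """Integrate down the leftmost branch first, then move right."""
    order = []
    stack = [root]
    while stack:
        module = stack.pop()
        order.append(module)
        # Push children in reverse so the leftmost child is integrated next.
        stack.extend(reversed(HIERARCHY[module]))
    return order


def breadth_first_order(root):
    """Integrate every module at one level before moving to the next level."""
    order = []
    queue = deque([root])
    while queue:
        module = queue.popleft()
        order.append(module)
        queue.extend(HIERARCHY[module])
    return order


if __name__ == "__main__":
    print("Depth-first:  ", " ".join(depth_first_order("A1")))
    print("Breadth-first:", " ".join(breadth_first_order("A1")))
```

For this hierarchy, the depth-first order is A1 A2 A5 A7 A6 A3 A8 A4 and the breadth-first order is A1 A2 A3 A4 A5 A6 A8 A7. Both orders match the sequences described in the text.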

Various advantages and disadvantages associated with top-down integration are listed in the following table.

Table: Advantages and Disadvantages of Top-down Integration

| Advantages | Disadvantages |
|---|---|

In bottom-up integration testing, individual modules are integrated starting from the bottom and moving upwards in the hierarchy. That is, the modules at the lower levels of the control hierarchy are combined and tested first, proceeding towards the modules at the higher levels.

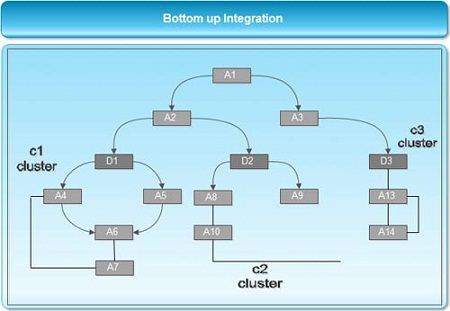

Some of the low-level modules present in software are integrated to form clusters or builds (collection of modules). A test driver that coordinates the test case input and output is written and the clusters are tested. After the clusters have been tested, the test drivers are removed and the clusters are integrated, moving upwards in the control hierarchy.

The low-level modules A4, A5, A6, and A7 are combined to form cluster C1. Similarly, modules A8, A9, A10, A11, and A12 are combined to form cluster C2. Finally, modules A13 and A14 are combined to form cluster C3. After the clusters are formed, drivers are developed to test them: drivers D1, D2, and D3 test clusters C1, C2, and C3, respectively. Once these clusters are tested, the drivers are removed and the clusters are integrated with the modules above them. Clusters C1 and C2 are integrated with module A2. Similarly, cluster C3 is integrated with module A3. Finally, modules A2 and A3 are integrated with module A1.
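The bottom-up sequence described above can be generated from the hierarchy. The clusters, drivers, and parent modules come from the text. The step wording and the `bottom_up_plan` function are illustrative assumptions. Each module is integrated only after everything beneath it has been tested, which amounts to a post-order traversal of the hierarchy.

```python
# Structure from the text: clusters of low-level modules sit beneath A2 and A3.
CLUSTERS = {
    "C1": ["A4", "A5", "A6", "A7"],
    "C2": ["A8", "A9", "A10", "A11", "A12"],
    "C3": ["A13", "A14"],
}
DRIVERS = {"C1": "D1", "C2": "D2", "C3": "D3"}
PARENTS = {"A1": ["A2", "A3"], "A2": ["C1", "C2"], "A3": ["C3"]}


def bottom_up_plan(node):
    """Return integration steps in post-order: lower levels before higher ones."""
    steps = []
    for child in PARENTS.get(node, []):
        steps.extend(bottom_up_plan(child))
    if node in CLUSTERS:
        modules = ", ".join(CLUSTERS[node])
        steps.append(f"Combine {modules} into {node} and test it with driver {DRIVERS[node]}")
    else:
        # Any drivers used for the children are discarded at this point.
        children = " and ".join(PARENTS[node])
        steps.append(f"Integrate {children} with {node}")
    return steps


if __name__ == "__main__":
    for number, step in enumerate(bottom_up_plan("A1"), start=1):
        print(number, step)
```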

Various advantages and disadvantages associated with bottom-up integration are listed in the following table.

Table: Advantages and Disadvantages of Bottom-up Integration

| Advantages | Disadvantages |
|---|---|

Software undergoes changes every time a new module is integrated with the existing subsystem. Changes can occur in the control logic, the input/output media, and so on. These changes may establish new data-flow paths, which may cause problems in parts of the software that were previously working perfectly. In addition, new errors may surface while existing errors are being corrected. To avoid these problems, regression testing is used.

Regression testing ‘re-tests’ the software or part of it to ensure that the components, features, and functions, which were previously working properly, do not fail as a result of the error correction process and integration of modules. It is regarded as an important activity as it helps in ensuring that changes (due to error correction or any other reason) do not result in additional errors or unexpected outputs from other system components.

To understand the need for regression testing, suppose an existing module has been modified or a new function has been added to the software. These changes may result in errors in other modules of the software that were previously working properly. To illustrate this, consider the following code fragment, which works correctly.

```
x := b + 1;
proc (z);
b := x + 2;
x := 3;
```

Now suppose that in an attempt to optimize the code, it is transformed into the following.

```
proc (z);
b := b + 3;
x := 3;
```

This may result in an error if procedure proc accesses variable x. In the original code, x holds the value b + 1 when proc is called, whereas in the optimized code it still holds its previous value. Thus, testing should be organized to detect possible degradations of correctness or other qualities caused by later modifications. During regression testing, a subset of already defined test cases is re-executed on the changed software so that such errors can be detected. Test cases for regression testing consist of three different types of tests, which are listed below.

- Tests that check all functions of the software.

- Tests that check the functions that can be affected due to changes.

- Tests that check the modified software modules.
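The `proc`/`x` fragment shown earlier can be turned into a small regression check. The original fragment is pseudocode, so this Python sketch makes two assumptions: the variables live in a shared `state` dictionary, and `proc` is a hypothetical procedure that reads `x`. A test result is recorded from the version that worked and is then re-executed against the optimized version.

```python
def proc(state):
    """Hypothetical procedure that reads variable x (the hidden dependency)."""
    state["seen_x"] = state["x"]


def original(state):
    state["x"] = state["b"] + 1
    proc(state)
    state["b"] = state["x"] + 2
    state["x"] = 3


def optimized(state):
    # Same final values of b and x, but proc now runs before x is updated.
    proc(state)
    state["b"] = state["b"] + 3
    state["x"] = 3


def run(version, b, x):
    state = {"b": b, "x": x}
    version(state)
    return state


# Expected results recorded while the original version was working properly.
EXPECTED = run(original, b=10, x=0)


def regression_test(version):
    """Re-execute the recorded test case against a (possibly changed) version."""
    return run(version, b=10, x=0) == EXPECTED


if __name__ == "__main__":
    print("original passes: ", regression_test(original))
    print("optimized passes:", regression_test(optimized))
```

The optimized version computes the same final `b` and `x`. The regression test still fails for it, because `proc` observes a different value of `x`. This is exactly the kind of degradation the re-executed test cases are meant to catch.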

Various advantages and disadvantages associated with regression testing are listed in the following table.

Table: Advantages and Disadvantages of Regression Testing

| Advantages | Disadvantages |
|---|---|

Smoke testing is defined as an integration testing approach in which a subset of test cases, designed to check the main functionality of the software, is used to test whether the vital functions of the software work correctly. This testing is best suited to time-critical software, as it permits the testers to evaluate the software frequently.

Smoke testing is performed while the software is under development. As the modules of the software are developed, they are integrated to form a ‘cluster’. After the cluster is formed, certain tests are designed to detect errors that prevent the cluster from performing its function. Next, the cluster is integrated with other clusters, eventually leading to the complete software, which is smoke tested frequently. A smoke test should possess the following characteristics.

- It should run quickly.

- It should try to cover a large part of the software and if possible the entire software.

- It should be easy for testers to perform smoke testing on the software.

- It should be able to detect the major errors present in the cluster being tested.

- It should try to find showstopper errors.
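A smoke test with these characteristics can be kept very small. The sketch below assumes a hypothetical build exposing three vital functions: `login`, `search`, and `checkout`. Each check is a quick call on a main path rather than a thorough test. The run stops at the first showstopper, so that a broken build is rejected early.

```python
import time


# Hypothetical vital functions of the integrated build.
def login(user):
    return user == "tester"


def search(term):
    return ["widget"] if term else []


def checkout(cart):
    return {"status": "ok", "items": len(cart)}


SMOKE_CHECKS = [
    ("login", lambda: login("tester")),
    ("search", lambda: search("widget") == ["widget"]),
    ("checkout", lambda: checkout(["widget"])["status"] == "ok"),
]


def run_smoke_test(checks):
    """Run each quick check in order; stop at the first showstopper."""
    start = time.perf_counter()
    for name, check in checks:
        try:
            passed = check()
        except Exception as exc:  # a crash on a main path is a showstopper
            print(f"SHOWSTOPPER in {name}: {exc!r}")
            return False
        if not passed:
            print(f"SHOWSTOPPER in {name}: check returned False")
            return False
    print(f"Smoke test passed in {time.perf_counter() - start:.3f} s")
    return True


if __name__ == "__main__":
    build_ok = run_smoke_test(SMOKE_CHECKS)
    print("Send build for further testing" if build_ok else "Reject build")
```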

Generally, smoke testing is conducted every time a new cluster is developed and integrated with the existing cluster. Smoke testing takes minimal time to detect errors that occur due to the integration of clusters. This reduces the risk of problems such as new errors being introduced into the software. A cluster cannot be sent for further testing unless smoke testing is performed on it. Thus, smoke testing determines whether the cluster is suitable to be sent for further testing. Other benefits of smoke testing are listed below.

- Minimizes the risks caused by integrating different modules: Since smoke testing is performed frequently on the software, it allows the testers to uncover errors as early as possible. This reduces the chance that a delay in uncovering errors severely affects the schedule.

- Improves the quality of the final software: Since smoke testing detects both functional and architectural errors as early as possible, they are corrected early, resulting in higher-quality software.

- Simplifies detection and correction of errors: As smoke testing is performed almost every time new code is added, the new code is clearly the probable cause of any errors found.

- Assesses progress easily: Since smoke testing is performed frequently, it keeps track of the continuous integration of modules, that is, the progress of software development. This boosts the morale of software developers.

Integration Test Documentation

To understand the overall procedure of software integration, a document known as test specification is prepared. This document provides information in the form of a test plan, test procedure, and actual test results. The test specification document comprises the following sections.

- Scope of testing: Outlines the specific design, functional, and performance characteristics of the software that need to be tested. In addition, it describes the completion criteria for each test phase and keeps track of the constraints that occur in the schedule.

- Test plan: Describes the testing strategy to be used for integrating the software. Testing is classified into two parts, namely, phases and builds. Phases describe distinct tasks that involve various subtasks, whereas builds are groups of modules that correspond to each phase. Some of the common test phases that require integration testing include user interaction, data manipulation and analysis, display outputs, database management, and so on. Each test phase covers a functional category within the software, and these phases can generally be related to a specific domain within the software architecture. The criteria commonly considered for all test phases include interface integrity, functional validity, information content, and performance.

  In addition to test phases and builds, a test plan should also include the following.

  - A schedule for integration, which specifies the start and end date for each phase.

  - A description of overhead software that focuses on the characteristics for which extra effort may be required.

  - A description of the environment and resources required for testing.

- Test procedure ‘n’: Describes the order of integration and the corresponding unit tests for modules. Order of integration provides information about the purpose and the modules that are to be tested. Unit tests are performed for the developed modules along with the description of tests for these modules. In addition, test procedure describes the development of overhead software, expected results during integration testing, and description of test case data. The test environment and tools or techniques used for testing are also mentioned in a test procedure.

- Actual test results: Provides information about actual test results and problems that are recorded in the test report. With the help of this information, it is easy to carry out software maintenance.

- References: Describes the list of references that are used for preparing user documentation. Generally, references include books and websites.

- Appendices: Provide supplementary material for the test specification document and are placed at the end of the document.

System Testing

Software is integrated with other elements such as hardware, people, and databases to form a computer-based system. This system is then checked for errors using system testing. IEEE defines system testing as ‘a testing conducted on a complete, integrated system to evaluate the system’s compliance with its specified requirement.’

In system testing, the system is tested against non-functional requirements such as accuracy, reliability, and speed. The main purpose is to validate and verify the functional design specifications and to check how integrated modules work together. The system testing also evaluates the system’s interfaces to other applications and utilities as well as the operating environment.

During system testing, associations between objects (like fields), control and infrastructure, and the compatibility of the earlier released software versions with new versions are tested. System testing also tests some properties of the developed software, which are essential for users. These properties are listed below.

- Usable: Verifies that the developed software is easy to use and understandable.

- Secure: Verifies that access to important or sensitive data is restricted even for those individuals who have authority to use the software.

- Compatible: Verifies that the developed software works correctly in conjunction with existing data, software, and procedures.

- Documented: Verifies that the manuals that give information about the developed software are complete, accurate, and understandable.

- Recoverable: Verifies that there are adequate methods for recovery in case of failure.

System testing requires a series of tests to be conducted because software is only a component of computer-based system and finally it is to be integrated with other components such as information, people, and hardware. The test plan plays an important role in system testing as it describes the set of test cases to be executed, the order of performing different tests, and the required documentation for each test. During any test, if a defect or error is found, all the system tests that have already been executed must be re-executed after the repair has been made. This is required to ensure that the changes made during error correction do not lead to other problems.

While performing system testing, conformance tests and reviews can also be conducted to check the conformance of the application (in terms of interoperability, compliance, and portability) with corporate or industry standards.

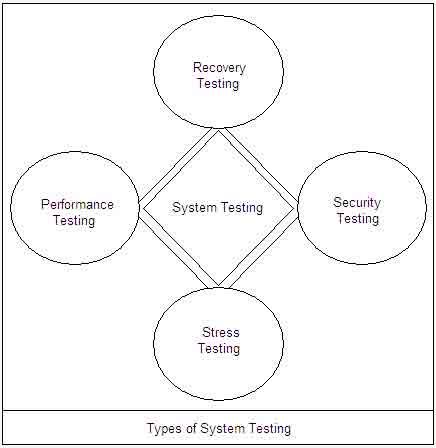

System testing is considered to be complete when the outputs produced by the software and the outputs expected by the user are either in line or the difference between the two is within permissible range specified by the user. Various kinds of testing performed as a part of system testing are recovery testing, security testing, stress testing, and performance testing.

Recovery testing is a type of system testing in which the system is forced to fail in different ways to check whether the software recovers from the failures without any data loss. The events that lead to failure include system crashes, hardware failures, unexpected loss of communication, and other catastrophic problems.

To recover from any type of failure, a system should be fault-tolerant. A fault-tolerant system is one that continues to perform its intended functions even when errors are present in it. If the system is not fault-tolerant, it must be corrected within a specified time limit after a failure has occurred so that the software performs its functions in the desired manner.

Test cases generated for recovery testing not only show the presence of errors in a system, but also provide information about the data lost due to problems such as power failure and improper shutting down of computer system. Recovery testing also ensures that appropriate methods are used to restore the lost data. Other advantages of recovery testing are listed below.

- It checks whether the backup data is saved properly.

- It ensures that the backup data is stored in a secure location.

- It ensures that proper details of recovery procedures are maintained.
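One way to force a failure and then check for data loss is sketched below. It assumes a hypothetical storage routine, `save_records`. The routine writes to a temporary file and then swaps it into place with `os.replace`, which the Python documentation describes as an atomic rename on POSIX systems. The test simulates a crash in the middle of a write and verifies that the last complete save can still be read intact.

```python
import json
import os
import tempfile


def save_records(path, records, crash_midway=False):
    """Write to a temporary file, then rename it over the real file."""
    data = json.dumps(records)
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        f.write(data[: len(data) // 2])
        if crash_midway:
            # Simulated failure: the process dies after a partial write.
            raise RuntimeError("simulated crash during write")
        f.write(data[len(data) // 2 :])
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, path)  # atomic rename on POSIX (same file system)


def load_records(path):
    with open(path) as f:
        return json.load(f)


def recovery_test():
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "records.json")
        save_records(path, [{"id": 1}, {"id": 2}])
        try:
            save_records(path, [{"id": 3}], crash_midway=True)
        except RuntimeError:
            pass  # the failure was forced on purpose
        # The last complete save must survive the crash without data loss.
        return load_records(path) == [{"id": 1}, {"id": 2}]


if __name__ == "__main__":
    print("recovered without data loss:", recovery_test())
```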

Systems with sensitive information are generally the target of improper or illegal use. Therefore, protection mechanisms are required to restrict unauthorized access to the system. To prevent improper use, security testing is performed to identify and remove any flaws in the software that intruders could exploit to cause security violations. To find such flaws, the tester, acting like an intruder, tries to penetrate the system by performing tasks such as cracking passwords, attacking the system with custom software, and intentionally producing errors in the system. Security testing focuses on the following areas of security.

- Application security: To check whether the user can access only those data and functions for which the system developer or user of system has given permission. This security is referred to as authorization.

- System security: To check whether only the users, who have permission to access the system, are accessing it. This security is referred to as authentication.
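Both areas can be checked with simple negative tests. For system security (authentication), an unknown user or a wrong password must be refused. For application security (authorization), an authenticated user must be refused any function they have not been granted. The user store, permission names, and functions below are hypothetical. Passwords are stored as salted hashes using the standard library's `hashlib.pbkdf2_hmac`, and hashes are compared with `hmac.compare_digest`.

```python
import hashlib
import hmac
import os


def hash_password(password, salt):
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


def make_user(password, permissions):
    salt = os.urandom(16)  # one random salt per user
    return {"salt": salt, "hash": hash_password(password, salt), "perms": set(permissions)}


# Hypothetical user store: username -> salted hash and granted permissions.
USERS = {
    "alice": make_user("correct horse", ["read_reports"]),
    "bob": make_user("s3cret", ["read_reports", "edit_salaries"]),
}


def authenticate(username, password):
    """System security: only known users with the right password get in."""
    user = USERS.get(username)
    if user is None:
        return False
    return hmac.compare_digest(user["hash"], hash_password(password, user["salt"]))


def authorize(username, permission):
    """Application security: users reach only the functions granted to them."""
    user = USERS.get(username)
    return user is not None and permission in user["perms"]


def security_tests():
    failures = []
    if authenticate("mallory", "guess"):
        failures.append("unknown user was authenticated")
    if authenticate("alice", "wrong password"):
        failures.append("wrong password was accepted")
    if not authenticate("alice", "correct horse"):
        failures.append("valid user was refused")
    if authorize("alice", "edit_salaries"):
        failures.append("alice reached a function she was not granted")
    if not authorize("bob", "edit_salaries"):
        failures.append("bob was refused a function he was granted")
    return failures


if __name__ == "__main__":
    problems = security_tests()
    print("all security checks passed" if not problems else problems)
```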

Generally, the disgruntled/dishonest employees or other individuals outside the organization make an attempt to gain unauthorized access to the system. If such people succeed in gaining access to the system, there is a possibility that a large amount of important data can be lost resulting in huge loss to the organization or individuals.

Security testing verifies that the system accomplishes all the security requirements and validates the effectiveness of these security measures. Other advantages associated with security testing are listed below.

- It determines whether proper techniques are used to identify security risks.

- It verifies that appropriate protection techniques are followed to secure the system.

- It ensures that the system is able to protect its data and maintain its functionality.

- It conducts tests to ensure that the implemented security measures are working properly.

Stress Testing

Stress testing is designed to determine the behavior of the software under abnormal situations. In this testing, the test cases are designed to execute the system in such a way that abnormal conditions arise. Some examples of test cases that may be designed for stress testing are listed below.

- Test cases that generate interrupts at a much higher rate than the average rate

- Test cases that demand excessive use of memory as well as other resources

- Test cases that cause ‘thrashing’ through excessive disk access.

IEEE defines stress testing as ‘testing conducted to evaluate a system or component at or beyond the limits of its specified requirements.’ For example, if a software system is developed to execute 100 statements at a time, then stress testing may generate 110 statements to be executed. This load may increase until the software fails. Thus, stress testing shows how a system reacts when it is made to operate beyond its performance and capacity limits. Other advantages of stress testing are listed below.

- It indicates the expected behavior of a system when it reaches the extreme level of its capacity.

- It executes a system till it fails. This enables the testers to determine the difference between the expected operating conditions and the failure conditions.

- It determines the part of a system that leads to errors.

- It determines the amount of load that causes a system to fail.

- It evaluates a system at or beyond its specified limits of performance.
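The 100-statement example can be expressed as a stress test. The test keeps raising the load past the specified capacity until the system fails, then reports the load at which the failure occurred. `ToySystem` is a hypothetical stand-in whose hard limit is set to 130 purely for illustration. A real system would fail for its own reasons, such as memory exhaustion or time-outs.

```python
class SystemOverloaded(Exception):
    pass


class ToySystem:
    """Hypothetical system specified for 100 statements, with a hidden hard limit."""

    SPECIFIED_CAPACITY = 100
    HARD_LIMIT = 130  # assumed value, unknown to the tester

    def execute(self, statements):
        if len(statements) > self.HARD_LIMIT:
            raise SystemOverloaded(f"cannot execute {len(statements)} statements")
        return len(statements)


def stress_test(system, start, step, max_load):
    """Increase the load from `start` in increments of `step` until failure."""
    load = start
    while load <= max_load:
        try:
            system.execute(["stmt"] * load)
        except SystemOverloaded as exc:
            return load, str(exc)
        load += step
    return None, "no failure up to max_load"


if __name__ == "__main__":
    failing_load, reason = stress_test(ToySystem(), start=100, step=10, max_load=1000)
    print(f"Specified capacity: {ToySystem.SPECIFIED_CAPACITY}")
    print(f"First failing load: {failing_load} ({reason})")
```

With these assumed numbers, loads of 100 to 130 succeed and the first failure occurs at 140. The gap between the specified capacity and the failure point is the margin the text describes.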

Performance Testing

Performance testing is designed to determine the run-time performance of the software (especially real-time and embedded systems) in the context of the entire computer-based system. It takes into consideration various performance factors, such as the load, volume, and response time of the system, and ensures that they are in accordance with the specifications. It also informs the software developer about the current performance of the software under various parameters (such as a requirement to complete a task within a specified time limit).

Often performance tests and stress tests are used together and require both software and hardware instrumentation of the system. By instrumenting a system, the tester can reveal conditions that may result in performance degradation or even failure of a system. While the performance tests are designed to assess the throughput, memory usage, response time, execution time, and device utilization of a system, the stress tests are designed to assess its robustness and error handling capabilities. Performance testing is used to test several factors that play an important role in improving the overall performance of the system. Some of these factors are listed below.

- Speed: Refers to how quickly the system responds to its users. Performance testing verifies whether the response is quick enough.

- Scalability: Refers to the extent to which the system is able to handle the load given to it. Performance testing verifies whether the system is able to handle the load expected by users.

- Stability: Refers to how long the system is able to operate without failure. Performance testing verifies whether the system remains stable under expected and unexpected loads.
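A basic speed check measures response times over many requests and compares a percentile with the stated requirement. In this sketch, the operation under test (`handle_request`), the 95th-percentile target of 50 ms, and the request count are all assumptions. In practice, these numbers come from the performance requirements, and the measurements are taken on the target environment.

```python
import statistics
import time


def handle_request(n):
    """Hypothetical operation under test."""
    return sum(i * i for i in range(n))


def measure_latencies(operation, requests, arg):
    """Time each request with a monotonic, high-resolution clock."""
    latencies_ms = []
    for _ in range(requests):
        start = time.perf_counter()
        operation(arg)
        latencies_ms.append((time.perf_counter() - start) * 1000)
    return latencies_ms


def performance_report(latencies_ms, p95_target_ms):
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(latencies_ms, n=100)[94]
    return {
        "mean_ms": statistics.mean(latencies_ms),
        "p95_ms": p95,
        "meets_target": p95 <= p95_target_ms,
    }


if __name__ == "__main__":
    samples = measure_latencies(handle_request, requests=200, arg=10_000)
    print(performance_report(samples, p95_target_ms=50.0))
```

A percentile is used rather than the mean because a few slow responses can hide behind a good average. The same loop, run while the load is raised, also gives a rough view of scalability.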

The outputs produced during performance testing are provided to the system developer. Based on these outputs, the developer makes changes to the system in order to remove the errors. This testing also checks the system characteristics such as its reliability. Other advantages associated with performance testing are listed below.

- It assesses whether a component or system complies with specified performance requirements.

- It compares different systems to determine which system performs better.
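
Comparing systems can be as simple as timing two candidate implementations of the same operation under identical conditions. The sketch below uses Python's standard `timeit` module and reports the best (minimum) of several repeated trials, which is the approach the `timeit` documentation recommends for reducing noise. The two candidates (membership lookup in a list versus a set) are hypothetical stand-ins for two systems.

```python
import timeit
from typing import Callable, Dict


def compare_implementations(
    candidates: Dict[str, Callable[[], object]], number: int = 1000, repeat: int = 5
) -> Dict[str, float]:
    """Return, for each candidate, the best total time in seconds over `repeat` trials of `number` calls."""
    return {
        name: min(timeit.repeat(func, repeat=repeat, number=number))
        for name, func in candidates.items()
    }


def fastest(timings: Dict[str, float]) -> str:
    """Name of the candidate with the lowest measured time."""
    return min(timings, key=timings.get)


if __name__ == "__main__":
    data_list = list(range(10_000))
    data_set = set(data_list)
    timings = compare_implementations({
        "list lookup": lambda: 9_999 in data_list,
        "set lookup": lambda: 9_999 in data_set,
    })
    print(timings, "->", fastest(timings))
```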

Acceptance Testing

Acceptance testing is performed to ensure that the functional, behavioral, and performance requirements of the software are met. IEEE defines acceptance testing as ‘formal testing with respect to user needs, requirements, and business processes conducted to determine whether or not a system satisfies the acceptance criteria and to enable the user, customers or other authorized entity to determine whether or not to accept the system.’

During acceptance testing, the software is tested and evaluated by a group of users either at the developer’s site or at the user’s site. This enables the users to test the software themselves and analyze whether it meets their requirements. To perform acceptance testing, a predetermined set of data is given to the software as input. The expected outputs must be known before acceptance testing begins so that the outputs produced by the software can be compared with them. Based on the results of the tests, users decide whether to accept or reject the software. That is, if the expected and produced outputs match, the software is considered correct and is accepted; otherwise, it is rejected.
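
The accept/reject rule described above can be sketched as a table of predetermined inputs paired with expected outputs. In this illustration, `grade` is a hypothetical system under test and the test cases are invented; in practice, the cases and their expected outputs would be agreed with the users before testing starts.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class AcceptanceResult:
    accepted: bool
    mismatches: List[Tuple[object, object, object]]  # (input, expected, produced)


def run_acceptance_tests(
    system: Callable[[object], object], cases: List[Tuple[object, object]]
) -> AcceptanceResult:
    """Feed each predetermined input to the system and compare with the expected output."""
    mismatches = []
    for given, expected in cases:
        produced = system(given)
        if produced != expected:
            mismatches.append((given, expected, produced))
    # Accept only if every produced output matches its expected output.
    return AcceptanceResult(accepted=not mismatches, mismatches=mismatches)


def grade(score: int) -> str:
    """Hypothetical system under test: converts a score to a letter grade."""
    if score >= 90:
        return "A"
    if score >= 75:
        return "B"
    if score >= 50:
        return "C"
    return "F"


# Hypothetical predetermined inputs and the outputs the users expect for them.
CASES = [(95, "A"), (90, "A"), (80, "B"), (50, "C"), (49, "F")]

if __name__ == "__main__":
    result = run_acceptance_tests(grade, CASES)
    print("ACCEPT" if result.accepted else f"REJECT: {result.mismatches}")
```

Recording the mismatches, rather than only a pass/fail flag, gives the developers the information they need to correct the software if it is rejected.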

Various advantages and disadvantages associated with acceptance testing are listed in Table.

Table Advantages and Disadvantages of Acceptance Testing

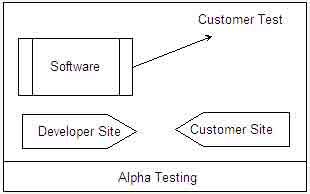

Since the software is intended for a large number of users, it is not possible to perform acceptance testing with all of them. Therefore, organizations engaged in software development use alpha and beta testing to detect errors by allowing a limited number of users to test the software.

Alpha Testing

Alpha testing is considered a form of internal acceptance testing in which the users test the software at the developer’s site. In other words, this testing assesses the performance of the software in the environment in which it is developed. On completion of alpha testing, users report the errors to the software developers so that they can correct them.

Some advantages of alpha testing are listed below.

- It helps identify errors in the software before it is released to external users.

- It checks whether all the functions mentioned in the requirements are implemented properly in the software.

Beta Testing

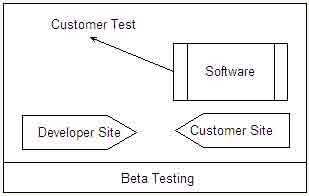

Beta testing assesses the performance of the software at the user’s site. This testing is ‘live’ testing and is conducted in an environment that is not controlled by the developer; that is, it is performed without any interference from the developer. Beta testing is performed to determine whether the developed software satisfies user requirements and fits within the business processes.

Note that beta testing is considered external acceptance testing, which aims to get feedback from potential customers. For this, limited public releases of the system (known as beta versions) are made available to groups of people or to the open public (to get more feedback). These people test the software to detect any faults or bugs that the developers may have missed and report their feedback. After the feedback is received, the system is modified and released either for sale or for further beta testing.

Some advantages of beta testing are listed below.

- It evaluates the entire documentation of the software. For example, it examines the detailed description of software code, which forms a part of documentation of the software.

- It checks whether the software operates successfully in the user environment.

Dinesh Thakur holds an B.C.A, MCDBA, MCSD certifications. Dinesh authors the hugely popular