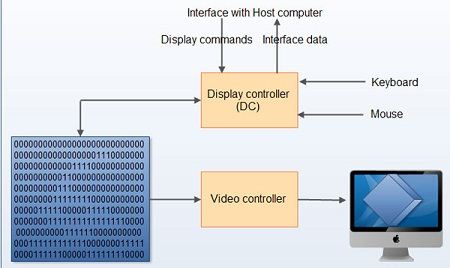

An EXPANSION CARD that enables a personal computer to create a graphical display. The term harks back to the original 1981 IBM PC, which could display only text and required such an optional extra card to ‘adapt’ it to display graphics.

The succession of graphics adapter standards that IBM produced throughout the 1980s (CGA, EGA, VGA) both defined and confined the graphics capabilities of personal computers. That changed with the arrival of IBM-compatibles and the graphical Windows operating system, which together spawned a whole industry manufacturing graphics adapters with higher capabilities.

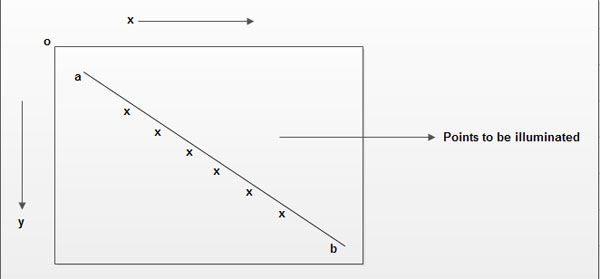

Nowadays most graphics adapters are also powerful GRAPHICS ACCELERATORS, capable of displaying 24-bit colour at resolutions of 1280 × 1024 pixels or better. Providing software support for the plethora of different makes of adapter has become an onerous task for software developers.
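As a rough illustration of what 24-bit colour at 1280 × 1024 asks of an adapter, the sketch below computes the memory needed to hold a single full frame. It assumes a simple packed layout of 3 bytes per pixel with no row padding; the helper name `framebuffer_bytes` is hypothetical. Many real adapters store 24-bit colour in 32-bit words instead, which raises the figure from 3.75 MiB to 5 MiB.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes for one full frame, assuming packed pixels and no row padding (hypothetical helper)."""
    total_bits = width * height * bits_per_pixel
    # Round up to a whole number of bytes
    return (total_bits + 7) // 8


packed = framebuffer_bytes(1280, 1024, 24)   # 3 bytes per pixel
padded = framebuffer_bytes(1280, 1024, 32)   # 24-bit colour stored in 32-bit words
print(packed, packed / 2**20)  # 3932160 3.75
print(padded, padded / 2**20)  # 5242880 5.0
```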