Batch processing systems were introduced to avoid the problems of early systems, chiefly their long setup time. Setup time was reduced by processing jobs in batches: similar jobs were submitted to the CPU together and run as a group.

The main function of a batch processing system is to keep executing the jobs in a batch automatically, one after another. This task is performed by the ‘Batch Monitor’, which resides in the low end of main memory.

This technique became possible with the introduction of hard-disk drives and card readers. Jobs could now be stored on disk to create a pool of jobs for execution as a batch. The batch monitor read the pooled jobs and grouped them, placing jobs with similar needs in the same batch. The batched jobs were then executed automatically one after another, which saved time because shared activities (such as loading a compiler) were performed only once per batch. The result was improved system utilization, owing to the reduced turnaround time.
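The saving from loading a compiler once per batch can be illustrated with a small calculation. The following is a minimal sketch, not a model of any real system: the fixed `SETUP_TIME`, the job list, and both function names are assumptions made for illustration.

```python
from itertools import groupby

# Hypothetical cost model: loading a compiler costs SETUP_TIME units,
# after which each job runs for its own run time.
SETUP_TIME = 5

def total_time_unbatched(jobs):
    """Every job pays the setup cost, as if the compiler were reloaded each time."""
    return sum(SETUP_TIME + run_time for _, run_time in jobs)

def total_time_batched(jobs):
    """Jobs with the same needs are grouped; setup is paid once per batch."""
    ordered = sorted(jobs, key=lambda job: job[0])  # groupby needs sorted input
    total = 0
    for _, batch in groupby(ordered, key=lambda job: job[0]):
        total += SETUP_TIME + sum(run_time for _, run_time in batch)
    return total

# (compiler needed, run time) pairs; the values are made up.
jobs = [("FORTRAN", 3), ("COBOL", 4), ("FORTRAN", 2), ("COBOL", 1), ("FORTRAN", 6)]

print(total_time_unbatched(jobs))  # 41: five setups plus 16 units of run time
print(total_time_batched(jobs))    # 26: two setups plus 16 units of run time
```

With five jobs that need only two different compilers, three of the five setups are avoided.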

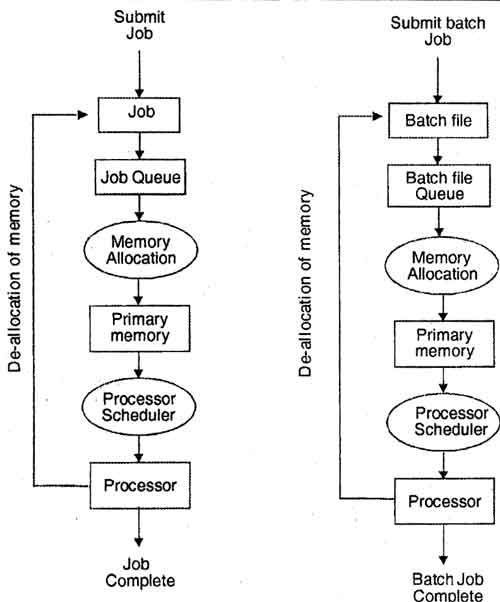

In the early job processing systems, jobs were placed in a job queue and the memory allocator managed the primary memory space. When space became available in main memory, a job was selected from the job queue and loaded into memory.

Once a job was loaded into primary memory, it competed for the processor. When the processor became available, the processor scheduler selected a job that was loaded in memory and executed it.
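The two stages described above, admission into memory and selection by the processor scheduler, can be sketched as a small simulation. This is an illustrative sketch under stated assumptions: jobs are admitted strictly from the head of the queue, each loaded job runs to completion, and the function name and job sizes are hypothetical.

```python
from collections import deque

def run_jobs(job_queue, memory_capacity):
    """Return a trace of ("load", name) and ("run", name) events."""
    waiting = deque(job_queue)   # (name, memory_needed) pairs in the job queue
    in_memory = deque()          # jobs loaded and competing for the processor
    free = memory_capacity
    trace = []
    while waiting or in_memory:
        # Memory allocator: load the job at the head of the queue while it fits.
        while waiting and waiting[0][1] <= free:
            name, size = waiting.popleft()
            free -= size
            in_memory.append((name, size))
            trace.append(("load", name))
        if not in_memory:
            raise ValueError(f"job {waiting[0][0]!r} does not fit in memory")
        # Processor scheduler: pick a loaded job and run it to completion,
        # which releases the memory it occupied.
        name, size = in_memory.popleft()
        trace.append(("run", name))
        free += size
    return trace

trace = run_jobs([("A", 40), ("B", 50), ("C", 30)], memory_capacity=100)
print(trace)
# [('load', 'A'), ('load', 'B'), ('run', 'A'), ('load', 'C'), ('run', 'B'), ('run', 'C')]
```

Job C has to wait in the job queue until A finishes and releases enough memory.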

The batch strategy was implemented to provide batch file processing: files belonging to the same batch were processed together to speed up the task.

Batch File Processing

In batch processing, users were expected to prepare a program as a deck of punched cards. The header cards in the deck were the “job control” cards, which indicated which compiler was to be used (FORTRAN, COBOL and so on). The deck was handed to an operator, who collected such jobs from various users. The submitted jobs were then grouped as FORTRAN jobs, COBOL jobs and so on. In addition, jobs were classified as ‘long jobs’, which required more processing time, or ‘short jobs’, which required little processing time. Each set of jobs was treated as a batch, and processing was done one batch at a time. For instance, there might be a batch of short FORTRAN jobs. The output of each job was separated and returned to the users in a collection area. In this approach, files of a similar batch were processed together to speed up the task.
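The operator's grouping step can be pictured as sorting decks into bins keyed by compiler and job length. The sketch below is only an illustration: the `LONG_JOB_THRESHOLD` cutoff, the user names, and the estimated times are all hypothetical.

```python
from collections import defaultdict

# Hypothetical cutoff: jobs estimated above this many minutes count as "long".
LONG_JOB_THRESHOLD = 10

def make_batches(decks):
    """Group submitted decks by (compiler, length class), keeping arrival order."""
    batches = defaultdict(list)
    for user, compiler, estimated_minutes in decks:
        length = "long" if estimated_minutes > LONG_JOB_THRESHOLD else "short"
        batches[(compiler, length)].append(user)
    return dict(batches)

# (user, compiler named on the job control card, estimated run time in minutes)
decks = [
    ("alice", "FORTRAN", 2),
    ("bob", "COBOL", 30),
    ("carol", "FORTRAN", 4),
    ("dave", "FORTRAN", 25),
]

batches = make_batches(decks)
print(batches[("FORTRAN", "short")])  # ['alice', 'carol']
print(batches[("FORTRAN", "long")])   # ['dave']
print(batches[("COBOL", "long")])     # ['bob']
```

Each key corresponds to one batch, such as the batch of short FORTRAN jobs mentioned above.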

In this environment there was no interactivity, and users had no direct control over their jobs. Only one job could use the processor at a time, and whenever a job performed an input/output operation the processor had to sit idle until the I/O completed. This resulted in under-utilization of CPU time.
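The effect of I/O waits on CPU utilization can be estimated with simple arithmetic. The sketch below assumes that jobs run strictly one after another and that the CPU does nothing while a job performs I/O; the function name and the timings are made up for illustration.

```python
def cpu_utilization(jobs):
    """Fraction of elapsed time the CPU is busy when jobs run one at a time.

    Each job is a (cpu_time, io_time) pair; the CPU idles during io_time.
    """
    cpu_busy = sum(cpu for cpu, _ in jobs)
    elapsed = sum(cpu + io for cpu, io in jobs)
    return cpu_busy / elapsed

# Hypothetical jobs: (CPU time, I/O time) in the same time unit.
jobs = [(2, 8), (5, 5), (3, 7)]
print(round(cpu_utilization(jobs), 2))  # 0.33: busy for 10 of 30 time units
```

In this example the processor sits idle for two thirds of the elapsed time.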

Early batch processing systems scheduled jobs in order of arrival, i.e. First Come First Served (FCFS). Although this scheduling method was simple to implement, it was unfair when long jobs were queued ahead of short jobs. To overcome this problem, another scheduling method, ‘Shortest Job First’, was used. As far as memory management is concerned, main memory was divided into two fixed partitions. The lower partition was assigned to the resident portion of the OS, the Batch Monitor, while the other (higher) partition was assigned to user programs.
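The difference between the two scheduling methods described above shows up in the average waiting time. The following is a minimal sketch that assumes all jobs are already in the queue at time 0 and that run times are known in advance; the function name and the run times are illustrative.

```python
def average_wait(run_times):
    """Average time jobs spend waiting when run back to back in the given order."""
    total_wait = 0
    elapsed = 0
    for run_time in run_times:
        total_wait += elapsed   # this job waited for everything before it
        elapsed += run_time
    return total_wait / len(run_times)

arrival_order = [24, 3, 3]   # one long job queued ahead of two short ones

fcfs = average_wait(arrival_order)          # run in arrival order
sjf = average_wait(sorted(arrival_order))   # run the shortest job first

print(fcfs)  # 17.0: the waits are 0, 24 and 27
print(sjf)   # 3.0: the waits are 0, 3 and 6
```

Running the short jobs first cuts the average wait sharply, although the long job now waits behind them.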

Although batch processing reduced system setup time, it still had limitations, such as under-utilization of CPU time and the lack of user interaction with running jobs. The jobs of a batch were executed one after another, and while a job was performing I/O operations the CPU sat idle, resulting in a low degree of resource utilization.

Dinesh Thakur holds an B.C.A, MCDBA, MCSD certifications. Dinesh authors the hugely popular
